If Prometheus was worthy of the wrath of Heaven for kindling the first fire upon earth, how ought all the Gods honor the men who make it their professional business to put it out? – John Godrey Saxe

If Prometheus was worthy of the wrath of Heaven for kindling the first fire upon earth, how ought all the Gods honor the men who make it their professional business to put it out? – John Godrey Saxe

Sooner or later any seasoned software developer, programmer or project manager finds themselves in firefighting mode – where it’s a matter of putting out a fire so that it doesn’t spread to other things, taking a project down. Some people think that the ‘fingers in the dyke’ are a more appropriate metaphore – and at times that is true – but I’m writing about firefighting today.

Getting out of firefighting mode is relatively easy. Like fire, there are certain things that are needed to create the conditions for the fire in the first place. A fire needs fuel, oxygen and a source of ignition.

Oxygen is easy in a software project – it’s a requirement for the software project to start and survive in the first place: users.

The fuel, all too often, results from poor software and system administration practices and poor management decisions.

Like fire, the ignition sources can be many. It can be the fuel rubbing together to form the friction (bad decisions culminating into a burst of flame), or a visiting pyromaniac (someone taking advantage of an exploit), or enough oxygen (users) blowing over a hot spot (friction) that causes a burst of flame.

The only way to get out of firefighting mode with a software project is to deal with the cause of the fires. Sure, putting out the fires is important, but sometimes you can’t put them out fast enough – and unless you treat the underlying cause, the fire will gut the project.

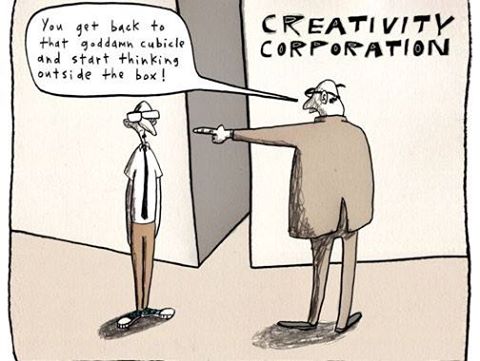

Almost all the time, the people who have been fighting the fires have opinions on what is causing all the fires – and they typically have a plan to tackle them, if only they had time or management approval. Despite our best efforts in Hollywood, time travel is not yet viable – and that leaves us with management decisions. Management decisions over a period of time become company culture. A culture that is derisive of good software project management is almost impossible to change unless something drastic happens.

About 15 years ago, I came up with a phrase that seems to be pretty accurate since: Management doesn’t smell the fire until the flames are licking their posteriors. Until they understand that there is a problem that needs to be solved, they will not try to solve it. The time can vary depending on how much attention they are giving to the issues and, where the real problem usually is, how much they trust the people that they hired to talk to warn them about these things.

If you can’t solve the management/leadership problem, you’re sunk. You’re done. Cash in your paychecks and update your resume.

If you can get management to see the need for change – probably with the help of someone in finance who will point out how much money is being spent fighting fires – the only tried and true way to get out of firefighting mode is:

(1) Take a hard look at whether the project is dead or not. If you’re so bogged down in maintenance mode, it’s time to figure out what Plan B is. If not…

(2) Start implementing best practices, from source control to documentation of the project itself. When there are too many things going on, find a finite area to begin controlling and start controlling it. It’s an uphill battle but it can be done with management making the path clear.

(3) Draw lines in the sand. If fires don’t meet certain criteria you set – don’t fix them. This is triage and has to be decided with the overall project in mind.

Image at top courtesy U.S. Fish and Wildlife Service, Southeast Region, and made available under this Creative Commons License.