Every morning I set aside my morning coffee to travel the world through my mobile phone as if it were a spaceship, hermetically sealed. I peer through the window as I travel from point to point I find of interest that morning. One moment I’m checking on my friends in Ukraine inobtrusively, keenly aware that there’s more spin than the media frenzy of a hurricane hitting the United States.

Every morning I set aside my morning coffee to travel the world through my mobile phone as if it were a spaceship, hermetically sealed. I peer through the window as I travel from point to point I find of interest that morning. One moment I’m checking on my friends in Ukraine inobtrusively, keenly aware that there’s more spin than the media frenzy of a hurricane hitting the United States.

The next moment, I’ll visit friends and family throughout the world as I read their Facebook posts. Then I’ll look at what the talking heads of tech think is important enough to hype, then I’ll deep dive into a few of them to discreetly consider most of it nonsense. I am a weathered traveler of space and time, and the Internet my generation extended around the world has not been wasted on me. I am a tourist of tourists, as are we all. It’s what we humans do.

When people take those all inclusive vacations to resorts, they get to see a sanitized version of the country they are visiting that doesn’t reflect the reality of that country. You’ll hear people talking about when they visited this place or that, and how wonderful this or that was – you’ll rarely hear what was wrong with the country because… well, that would be bad for tourism, and tourism is about selling a dream to people who want to dream, much like politics, but with a more direct impact on revenue that politicians can waste.

Media, and by extension, social media, are much the same. We see what’s ‘in the frame’ of the snapshot we are giving, and that framing makes us draw conclusions about a place. A culture. A religion, or not. An event. A person.

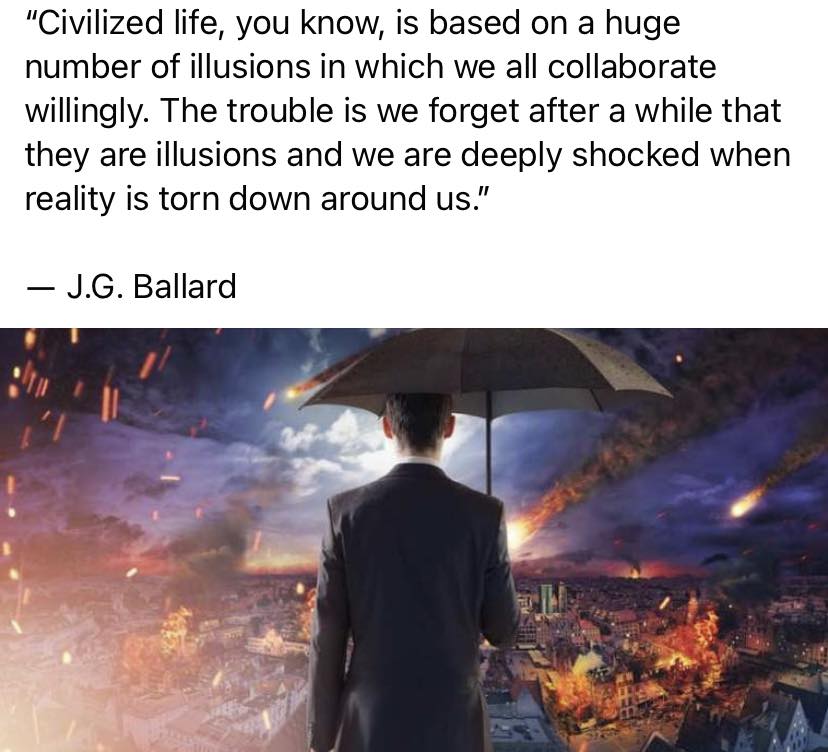

Some of us believe that we’re seeing everything clearly, as in the image at the top of this post. You can look at any point in the picture and see detail, but that’s not how we really see it, and therefore, in our mind, it’s not the way it is. What we see is subject to the ‘red dots’ I wrote of, things looking for our attention directed consciously by someone else (marketing/advertising) and by subconsciously by our own biases.

The reality of our experiences is usually more like something to the right. Our focus is drawn by red dots and biases, and in the periphery other things are there, poorly defined. This example is purely visual. And because we generally like what we see, there’s generally a positive emotion with what we see that reinforces wanting to see it again.

The reality of our experiences is usually more like something to the right. Our focus is drawn by red dots and biases, and in the periphery other things are there, poorly defined. This example is purely visual. And because we generally like what we see, there’s generally a positive emotion with what we see that reinforces wanting to see it again.

This is not new, and it can be good and bad. These days an argument could be made that the red dots of society have run amok.

A group of really smart people with really good intentions created a system that connects human experiences across the planet in a way that is significantly faster than before. Some of our ancestors could not send a message around the world within their lifetime, and here are presently discussing milliseconds to nanoseconds as if we even would notice a millisecond passing ourselves. Our framing was simpler before, we didn’t have nearly as significant a communicating global network back then. Technologies that spread things faster range from the wheel to sailing to flight to the Internet, in broad strokes. As Douglas Adams would write, “Startling advances in twig technology…”

However we got here, here we are.

However we got here, here we are.

If one group has a blue focus, another purple, another yellow, we get overlaps in framing and the immediate effect has been for everyone to go off in their corners and discuss all that is blue, purple and yellow respectively.

An optimist might say that eventually, the groups will recognize the overlaps in the framing and maybe do a bit better at communicating, but it doesn’t seem like we’re very near that yet. A pessimist will say that it will never happen, and the realist will have to decide the way forward.

I’m of the opinion that it’s our duty as humans to work toward increasing the size of our frames as much as possible so that we have a better understanding of what’s going on within our frame. I don’t know that I’m right, and I don’t know that I’m wrong. If I cited history, the victories would be few that way – there’s always some domination that seems to happen. Personally, I don’t see any really dominant perspective, just a bunch of polarized frames throwing rocks at each other from a distance.

We’ll get so wrapped up in things that we forget sometimes that there’s room for more than one perspective, as difficult as it may be for people to understand. We’ll forget our small knowledge of someone else’s frame does not define their frame, but defines our frame. We forget that we’re just tourists of frames, we visit as long as we wish but do not actually live in a different frame.

Sounds messy? You bet. And all of that mess is being used to train large language models. Could it homogenize things? Should it? I am fairly certain we’re not ready for that conversation, but like talking about puberty and sex with a teenager… we do seem a bit late on the subject.

I’m just a cybertourist visiting your prison, as you visit mine. Please don’t look under the carpet.

One of the things that has bothered me most about ChatGPT is that it’s data was scraped from the Internet, where a fair amount of writing I have done resides. It would be hubris to think that what I wrote is so awesome that it could be ‘stealing’ from me, but it would also be idiotic to think that content ChatGPT produces isn’t derivative in a legal sense. In a world almost critically defined by self-preservation, I think we all should know where the line is. We don’t, really, but we should.

One of the things that has bothered me most about ChatGPT is that it’s data was scraped from the Internet, where a fair amount of writing I have done resides. It would be hubris to think that what I wrote is so awesome that it could be ‘stealing’ from me, but it would also be idiotic to think that content ChatGPT produces isn’t derivative in a legal sense. In a world almost critically defined by self-preservation, I think we all should know where the line is. We don’t, really, but we should. When I’m out and about, I make connections with people I see regularly. Recently, I encountered a security guard I know who works regularly at a local mall, and he and I paused to chat. He generally has a ‘new and improved way’ to get out of his job, where he makes about the equivalent of $2 US an hour.

When I’m out and about, I make connections with people I see regularly. Recently, I encountered a security guard I know who works regularly at a local mall, and he and I paused to chat. He generally has a ‘new and improved way’ to get out of his job, where he makes about the equivalent of $2 US an hour. Most of the people around me are completely unaware of the

Most of the people around me are completely unaware of the

Once upon a time as a Navy Corpsman in the former Naval Hospital in Orlando, we lost a patient for a period – we simply couldn’t find them. There was a search of the entire hospital. We eventually did find her but it wasn’t by brute force. It was by recognizing what she had come in for and guessing that she was on LSD. She was in a ladies room, staring into the mirror, studying herself through a sensory filter that she found mesmerizing. What she saw was something only she knows, but it’s safe to say it was a version of herself, distorted in a way only she would be able to explain.

Once upon a time as a Navy Corpsman in the former Naval Hospital in Orlando, we lost a patient for a period – we simply couldn’t find them. There was a search of the entire hospital. We eventually did find her but it wasn’t by brute force. It was by recognizing what she had come in for and guessing that she was on LSD. She was in a ladies room, staring into the mirror, studying herself through a sensory filter that she found mesmerizing. What she saw was something only she knows, but it’s safe to say it was a version of herself, distorted in a way only she would be able to explain. Then I came across this humorous meme. It ends up being

Then I came across this humorous meme. It ends up being  Every morning I set aside my morning coffee to travel the world through my mobile phone as if it were a spaceship, hermetically sealed. I peer through the window as I travel from point to point I find of interest that morning. One moment I’m checking on my friends in Ukraine inobtrusively, keenly aware that there’s more spin than the media frenzy of a hurricane hitting the United States.

Every morning I set aside my morning coffee to travel the world through my mobile phone as if it were a spaceship, hermetically sealed. I peer through the window as I travel from point to point I find of interest that morning. One moment I’m checking on my friends in Ukraine inobtrusively, keenly aware that there’s more spin than the media frenzy of a hurricane hitting the United States. The reality of our experiences is usually more like something to the right. Our focus is drawn by red dots and biases, and in the periphery other things are there, poorly defined. This example is purely visual. And because we generally like what we see, there’s generally a positive emotion with what we see that reinforces wanting to see it again.

The reality of our experiences is usually more like something to the right. Our focus is drawn by red dots and biases, and in the periphery other things are there, poorly defined. This example is purely visual. And because we generally like what we see, there’s generally a positive emotion with what we see that reinforces wanting to see it again. However we got here, here we are.

However we got here, here we are. When I first started programming, I did a lot of walking. A few months ago I checked the distance I walked every day just back and forth to school and it was about 3.5 km, not counting being sent to the store, or running errands. At the same time, we had this IBM System 36 and a PC Network at school where space was limited, time was limited, and you didn’t have much time to be productive on the computer so you better have it locked down.

When I first started programming, I did a lot of walking. A few months ago I checked the distance I walked every day just back and forth to school and it was about 3.5 km, not counting being sent to the store, or running errands. At the same time, we had this IBM System 36 and a PC Network at school where space was limited, time was limited, and you didn’t have much time to be productive on the computer so you better have it locked down. The article, “

The article, “