Everyone’s been tapping out on their keyboards – and perhaps having ChatGPT explain – the technological singularity, or artificial intelligence singularity, or the AI singularity, or… whatever it gets repackaged as next.

Wikipedia has a very thorough read on it that is worth at least skimming to understand the basic concepts. It starts with the simplest of beginnings.

The technological singularity—or simply the singularity[1]—is a hypothetical future point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable changes to human civilization… [2][3]

Technological Singularity, Wikipedia, accessed 11 July 2023.

By that definition, we could say that the first agricultural revolution – the Neolithic agricultural revolution – was a technological singularity. Agriculture, which many take for granted, is actually a technology, and one we’re still trying to improve with our other technologies. Computational agroecology is one example of this.

Some friends I used to work with went on to apply drone technology to agriculture, circa 2015. Agricultural technology is still advancing, but what sets the technological singularity everyone’s writing about today apart is that we’re talking, basically, about a technology that has the capacity to become a runaway technology.

Runaway technology? When we get artificial intelligences doing surgery on their code to become more efficient and better at what they do, they will evolve in ways that we cannot predict but we can hope to influence. That’s the technological singularity that is the hot topic.

Since we can’t predict what will happen after such a singularity, speculating on it is largely a work of imagination. The outcome could be really bad. It could be really good. But let’s get back to present problems and how they could affect a singularity.

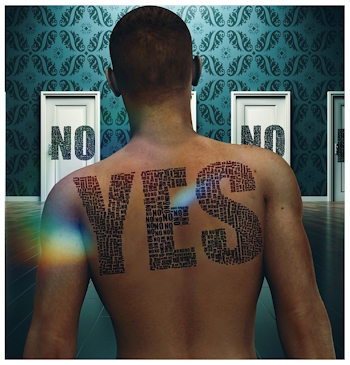

…Alignment researchers worry about the King Midas problem: communicate a wish to an A.I. and you may get exactly what you ask for, which isn’t actually what you wanted. (In one famous thought experiment, someone asks an A.I. to maximize the production of paper clips, and the computer system takes over the world in a single-minded pursuit of that goal.) In what we might call the dog-treat problem, an A.I. that cares only about extrinsic rewards fails to pursue good outcomes for their own sake. (Holden Karnofsky, a co-C.E.O. of Open Philanthropy, a foundation whose concerns include A.I. alignment, asked me to imagine an algorithm that improves its performance on the basis of human feedback: it could learn to manipulate my perceptions instead of doing a good job.)…

Can We Stop Runaway A.I.?: Technologists warn about the dangers of the so-called singularity. But can anything actually be done to prevent it?, Matthew Hutson, The New Yorker, May 16, 2023

In essence, this is a ‘yes-man’ problem: a system gives us what we want because it’s trained to, much like dogs’ eyebrows evolved to give us ‘puppy dog eyes’. We want to believe the dog really feels guilty, and the dog may feel guilty, but it also might just be signaling what it knows we want to see. It’s sort of like a child giving a parent the answer they want rather than the truth.

I think AI ‘hallucinations’ are examples of this. When an AI is prompted and has no sensible response, rather than saying, “I can’t give an answer,” it gives us something it thinks we might want to see. “No, I don’t know where the remote is, but look at this picture I drew!”

Now imagine that happening with an artificial intelligence that communicates with the same words and grammar we do but does not share our viewpoint, the viewpoint that gives things meaning. What would meaning be to an artificial intelligence that doesn’t understand our perspective, only how to give us what we want rather than what we’re asking for?

Homer Simpson parodies humanity in this regard. Homer might ask an AI how to get Bart to do something, and the AI might produce donuts. “oOooh,” Homer croons, distracted, “Donuts!” It’s a red dot problem: the responsibility lies as much with us for being distracted as with the AI (which we created) for ‘hallucinating’ and giving Homer donuts.

Then there’s Ray Kurzweil, predicting a future while he’s busy helping create it as a Director of Engineering at Google.

Of course, he could be right about this wonderful future that seems too good to be true, but it won’t be for everyone, given the state of the world. If my brain were connected to a computer, I’d probably have to wait for all the updates to install before I could get out of bed. And what happens if I don’t pay the subscription fee?

Just because we can do something doesn’t mean we should; the Titan submersible is a brilliant example of this. I don’t think many people will be lining up to plug a neural interface into their brain so that they can connect to the cloud. I think we’ll let the lab rats spend their money to beta test that for the rest of humanity.

The rest of humanity is beyond the moat for most technologists… and that’s why those of us who aren’t technologists, or who aren’t just technologists, should be talking about these problems.

The singularity is likely to happen. It may already have, with people’s attention spans reportedly down to 47 seconds because of ‘smart’ technology. When it comes to the singularity, technologists generally look only at the progress of technology and its benefits for humanity, but there has been a serious downside as well.

The conversation needs better balance, and that will probably be the subject of my next post here.