There are some funny memes going around about TikTok and… Chinese spyware, or what have you. The New York Times had a few articles on TikTok last week that were interesting and yet… missed a key point that the memes do not.

Being afraid of Chinese spyware while so many companies have been spying on their customers seems more like bias than anything.

Certainly, India got rid of TikTok and has done better for it. Personally, I don’t like giving my information to anyone if I can help it, but these days it can’t be helped. Why is TikTok an issue in the United States?

It’s not too hard to speculate that it’s about lobbying by American tech companies who lost the magic sauce for this generation. It’s also not hard to consider India’s reasoning about China being able to push their own agenda, particularly with violence on their shared borders.

Yet lobbying from the American tech companies is most likely, because they want your data and don’t want you to give it to China. They want to be able to sell you stuff based on what you’ve viewed, liked, posted, etc. So really, it’s not even about us.

It’s about the data that we give away daily when browsing social networks of any sort, websites, or even when you think you’re being anonymous using Google Chrome when in fact you’re still being tracked. The people who are advocating banning TikTok aren’t holding anyone else’s feet to the fire. Instead, they use the ‘they will do stuff with your information’ argument, when in fact we’ve had a lot of bad stuff happen with our information over the years.

Since 9/11, in particular, the US government has taken a pretty big interest in electronic trails, all in the interest of national security, with the FBI showing up after the Boston Marathon bombing just because people were looking at pressure cookers.

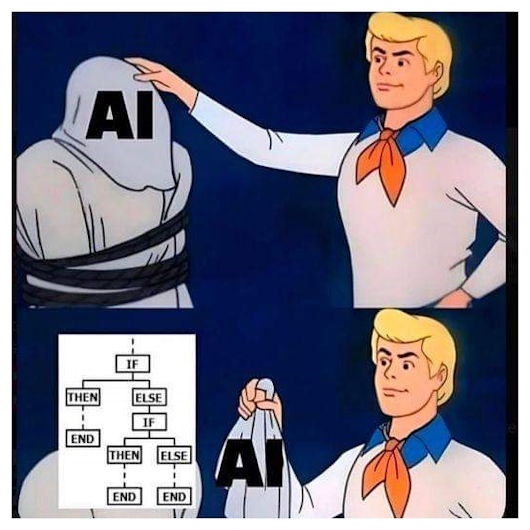

All of this information will possibly get poured into learning models for artificial intelligences, too. Even WordPress.com volunteered people’s blogs rather than asking for volunteers.

What value do you get for that? They say you get better advertising, which is something that I boggle at. Have you ever heard anyone wish that they could see better advertising rather than less advertising?

They say you get the stuff you didn’t even know you wanted, and to a degree, that might be true, but the ability to just go browse things has become a lost art. Just about everything you see on the flat screen you’re looking at is because of an algorithm deciding for you what you should see. Thank you for visiting, I didn’t do that!

Even that system gets gamed. This past week I got an ‘account restriction’ from Facebook for reasons that were not explained, other than instructions to go read the community standards, because algorithms are deciding based on behaviors that Facebook can’t seem to explain. Things really took off with that during Covid, when even people I knew were spreading some wrong information because they didn’t know better and, sometimes, willfully didn’t want to know better or understand their own posts in a broader context.

Am I worried about TikTok? Nope. I don’t use it. If you do use TikTok, you should be. But you should worry if you use any social network. It’s not so much about who is selling and reselling information about you as what they can do with it to control what you see.

Of course, most people on those platforms don’t see them for what they are, instead taking things at face value and not understanding the implications for the choices they will have in the future, which could range from the advertising to the content they see.

China’s not our only problem.