Most of us live in a lot of different worlds, and we see things differently because of it. Some of us live in more than one world at a time. That’s why sometimes it’s hard for me to consider the promise of artificial intelligence, what we’re actually getting, and the direction it’s going.

There’s space in this world, in research, for what we have now, which allows previously isolated knowledge to be regurgitated in a feat of math that makes the digital calculator look mundane. It’s statistics, it gives us what we want when we hit the ‘Enter’ button, and that’s not too bad.

Except it can replace an actual mind. Previously, if you read something, you didn’t have to guess whether a machine threw the words together or not. You didn’t wonder if the teacher gave you a test generated by a large language model, and the teacher didn’t wonder if you generated your answers the same way.

Now, we wonder. We wonder if we see an image. We wonder if we watch a video. We wonder enough so that the most popular female name for 2023 should be Alice.

So let me tell you where I think we should be heading with AI at this time.

What Could Be.

Everyone who is paying attention to what’s happening can see that the world is fairly volatile right now after the global pandemic, after a lot of economic issues that banks created combined with algorithmic trading… so this is the perfect time to drop some large language models in the world to make things better.

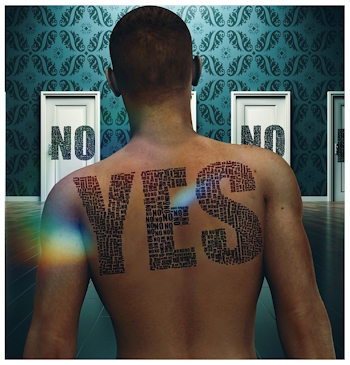

Nope.

No, it isn’t working that way. If we were focused on making the world better rather than worrying about using a good prompt for that term paper or blog post, it maybe could work that way. We could use things like ChatGPT to be consultants, but across mankind we lack the integrity to only use them as consultants.

“If anyone takes an AI system and starts designing speeches or messages, they generate the narrative that people want to hear. And the worst thing is that you don’t know that you are putting the noose around your own neck.” The academic added that the way to counter this situation is education.

“The only way to avoid manipulation is through knowledge. Without it, without information, without education, any human group is vulnerable,” he concluded.¹

“IA: implicaciones éticas más allá de una herramienta tecnológica”, Miguel Ángel Pérez Álvarez, Wired.com (Spanish), 29 Nov 2023.

There’s the problem. Education needs to adapt to artificial intelligence as well, because this argument, which at heart I believe to be true, does not suffer its own recursion: people don’t know when it’s ethically right to use AI, or don’t even know that there should be ethics involved.

As it happens, I’m pretty sure Miguel Ángel Pérez Álvarez already understands this and simply had his thoughts truncated, as happens in articles. He’s also got me wondering how different languages are handled by these Large Language Models and how different their training models are.

It’s like finding someone using an image you created and telling them, “Hey, you’re using my stuff!” and they say, “But it was on the Internet”. Never mind the people who believe that the Earth is flat, or who think that vaccinations give you better mobile connections.

AI doesn’t bother me. It’s people, it’s habits, and in a few decades they’ll put a bandaid on it and call it progress. The trouble is we have a stack of bandaids on top of each other at this point and we really need to look at this beyond the pulpits of some billionaires who enjoy more free speech than anyone else.

¹ Original quote: “Si cualquier persona toma un sistema de IA y se pone a diseñar discursos o mensajes, te generan la narrativa que la gente quiere escuchar. Y lo peor es que tú no sabes que te estás poniendo la soga al cuello solito”. El académico añadió que la manera de contrarrestar esta situación es la educación.

“La única manera de evitar la manipulación es a través del conocimiento. Sin este, sin información, sin educación, cualquier grupo humano es vulnerable”, finalizó.
