I’ve been experimenting with uploading images to ChatGPT 4 and seeing what it has to say about them. To me, it’s interesting because I gain some insight into how far things have progressed, as well as how descriptive ChatGPT can be about things.

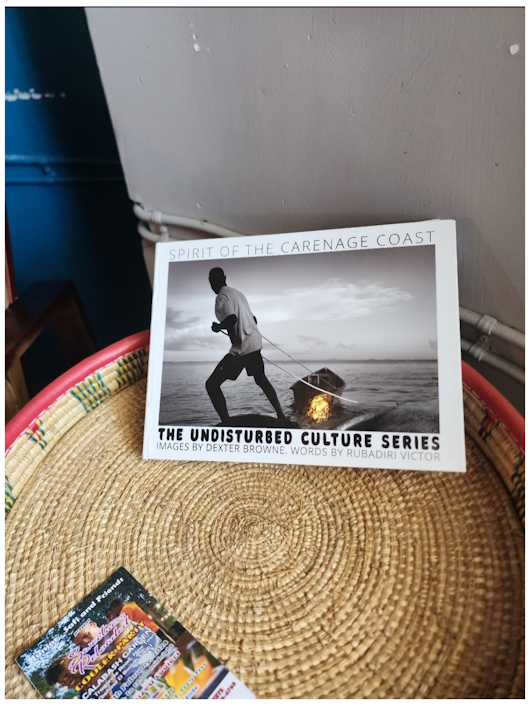

While having coffee yesterday with a friend, I was showing him the capabilities. He chose this scene.

He, like others I have shown it to here in Trinidad and Tobago, couldn’t believe it. It’s a sort of magic for people. What I like when I use it for this is that it doesn’t look at the picture as a human would, where the subject is pretty obvious. It looks at all of the picture, which is worth exploring in a future post.

He asked me how it could do that, how it could give the details that it did in the next image in this post. I tried explaining it, and I caught that he was thinking of the classic “IF…THEN…ELSE” sequence from the ‘classical’ computer science that we had been exposed to in the 1980s.

I tried to explain it, and I failed. I could tell I failed because he was frustrated with my explanation, and when I can’t explain something it bothers me.

We went our separate ways, and I went to a birthday party for an old friend. I didn’t get home until much later. With people driving as they do here in Trinidad, my mind was focused on avoiding them, so I didn’t get to think on it as I would have liked.

I slept on it.

This morning I remembered something I had drawn up in my teens, and now I think I can explain it better to my friend, and perhaps even people curious about it. Hopefully when I send this to him he’ll understand, and since I’m spending the time doing just that, why not everyone else?

Identifying Objects.

As a teenager, my drawing on a sketch pad page was about getting a computer to identify objects. It included a camera connected to the computer, which wasn’t done commercially yet, and what one would do was rotate the object through all the axes and the computer would be told what the object was at every conceivable angle. It was just an idea of a young man passionate about the future with the beginnings of a grounding in personal computing.

What we’ve all been doing with social media for some time is tagging things. This is how we organized finding things, and the incentive was for people to find our content.

Someone would post something on social media, as I did with Flickr, and tag it appropriately (we would hope). I did have fun with it, tagging things like a bat in a photograph as being naked, which oddly was my most popular photo. Of course it was naked, you perverts.

However, I also tagged it as a bat. And if you search Flickr for a bat, you’ll come up with a lot of images of bats. They are of all different sorts of bats, from all angles. There are even more specific tags for kinds of bats, but overall we humans pretty much know a bat when we see one. All those images of bats could then be added to a training set to allow a computer to come up with its own algorithmic way of identifying bats.

And it gets better.

The most popular versions of bats on Flickr, as an example, will be the ones that the most people liked. So now, the images of bats are given weight based on their popularity, and therefore could be seen as the best images of bats. Clearly, my picture of the bat in the bathtub shouldn’t be as popular a version.

It gets even better.

The more popular an image is, the more likely it is to be used on the Internet regardless of copyright, which means that it will show up in search engine rankings if you search for images of bats. Search engine ranking then becomes another weight.
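To make the idea of weights a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the photo records, the field names `likes` and `search_rank`, and the formula that combines them are all made up, and nothing here is known to be what any real system does. It only shows how popularity and search ranking could each nudge how much a tagged image counts.

```python
import math

# Hypothetical records: ids, like counts, and ranks are invented for illustration.
photos = [
    {"id": "bat_in_flight", "tags": {"bat"}, "likes": 900, "search_rank": 1},
    {"id": "bat_in_bathtub", "tags": {"bat", "naked"}, "likes": 40, "search_rank": 25},
    {"id": "sunset", "tags": {"sky"}, "likes": 500, "search_rank": 3},
]


def training_weight(photo):
    """Combine popularity and search ranking into one weight (assumed formula)."""
    popularity = math.log1p(photo["likes"])   # dampen runaway like counts
    rank_bonus = 1.0 / photo["search_rank"]   # rank 1 counts the most
    return popularity * (1.0 + rank_bonus)


def weighted_examples(photos, tag):
    """Return (photo id, weight) pairs for one tag, heaviest first."""
    matches = [(p["id"], training_weight(p)) for p in photos if tag in p["tags"]]
    return sorted(matches, key=lambda pair: pair[1], reverse=True)


bat_examples = weighted_examples(photos, "bat")
for photo_id, weight in bat_examples:
    print(f"{photo_id}: {weight:.2f}")
```

In this toy data the bat in the bathtub still counts as a bat, it just counts for less than the picture more people liked.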

The more popular images that we collectively have chosen become the training data for bats. The system learns the pattern of the objects, much as we do but differently, because it has a different way of looking at the same things.

If you take thousands – perhaps millions – of pictures of bats and train a system to identify them, it can go around looking for bats in images, going through all of the images available looking for bats. It will screw up sometimes, and you tell it, “Not a bat”. It also finds the bats that people haven’t tagged.

Given the number of tagged images and even text on the Internet, doing it with specific things is a fairly straightforward process because we hardly have to do anything. We simply correct mistakes.
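The “simply correct mistakes” part can be sketched with one of the oldest learning rules around, the perceptron, which only changes its mind when it is told it got something wrong. This is a toy under loud assumptions: the two numbers standing in for each image (roughly “how wing-like” and “how furry”) are invented, and real image systems learn from pixels with far larger models. The point is only that there is no IF…THEN…ELSE written by a person. The rule is whatever is left over after enough corrections.

```python
# Made-up features per image: (how wing-like, how furry). Purely illustrative.
examples = [
    ((0.9, 0.8), 1),  # bat
    ((0.8, 0.9), 1),  # bat
    ((0.9, 0.1), 0),  # bird: wings, no fur
    ((0.1, 0.9), 0),  # cat: fur, no wings
    ((0.2, 0.1), 0),  # teapot
]


def predict(weights, bias, features):
    """Return 1 for 'bat', 0 for 'not a bat'."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(examples, passes=1000, step=0.1):
    """Perceptron rule: adjust the weights only when a guess is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(passes):
        mistakes = 0
        for features, label in examples:
            error = label - predict(weights, bias, features)
            if error != 0:  # someone says "Not a bat" (or "that was a bat")
                mistakes += 1
                weights = [w + step * error * x for w, x in zip(weights, features)]
                bias += step * error
        if mistakes == 0:  # a full pass with nothing to correct
            break
    return weights, bias


weights, bias = train(examples)
print(predict(weights, bias, (0.85, 0.85)))  # an image nobody tagged
```

Nobody typed in what makes a bat a bat. The numbers in `weights` and `bias` were nudged each time the guess was corrected, and that is all the “knowledge” there is.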

Now do that with all the tags of different objects. Eventually, you’ll get to where multiple objects in a picture can be identified.

That’s basically how it works. I don’t know that they used Flickr.com, or search engines, but if I were doing it, that’s probably how I would – and it’s no accident that people have been encouraged to do this a lot more over the years preceding artificial intelligence hitting the mainstream. Now look at who is developing artificial intelligences: social networks and search engines.

The same thing applies to text.

Then, when you hand it an image with various objects in it, it identifies what it knows and describes them based on the words commonly associated with the objects, and if objects are grouped together, they become a higher level object. Milk and cookies is a great example.
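The grouping step can be pictured with a small sketch. It is a stand-in, not a description of how any real system does it: the `GROUPS` table below is hand-written and hypothetical, whereas a trained system would pick up such associations from the text and images it has seen. It only shows the shape of the idea, where objects found together get described as one higher level thing.

```python
# Hypothetical hand-written groupings; a real system would learn these from data.
GROUPS = {
    frozenset({"milk", "cookies"}): "milk and cookies (a snack)",
    frozenset({"cup", "saucer", "coffee"}): "coffee service",
}


def describe(detected):
    """Replace known groups of objects with one higher level label."""
    detected = set(detected)
    labels, used = [], set()
    for members, name in GROUPS.items():
        if members <= detected:  # every member of the group was spotted
            labels.append(name)
            used |= members
    leftovers = sorted(detected - used)  # objects that belong to no group
    return labels + leftovers


print(describe(["milk", "table", "cookies"]))
```

Stack that a few times, with groups of groups, and the description starts to sound like a scene rather than a list of things.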

And so it goes, stacking higher and higher a capacity to recognize patterns of patterns of patterns…

And this also explains how the revolution of Web x.0 may have carried the seeds of its own destruction.