This started off as a baseline post regarding generative artificial intelligence and its aspects, and grew fairly long because even as I was writing it, information was coming out. It’s my intention to do a ‘roundup’ like this, highlighting different focuses as needed. Every bit of it is connected, but in social media postings things tend to be written about in silos. I’m attempting to integrate them since the larger implications are hidden in these details, and will try to stay on top of it as things progress.

It’s long enough that it could have been several posts, but I wanted it all together at least once.

No AI was used in the writing, though some images have been generated by AI.

The two versions of artificial intelligence on the table right now – the marketed and the reality – have various problems that make it seem like we’re wrestling a mating orgy of cephalopods.

The marketing aspect is a constant distraction, feeding us what helps with stock prices and good will toward those implementing the generative AIs, while the real aspect of these generative AIs is not really being addressed in a cohesive way.

To simplify this, this post breaks it down into the Input, the Output, and the impacts on the ecosystem the generative AIs work in.

The Input.

There’s a lot that goes into these systems other than money and water. There’s the information used for the learning models, the hardware needed, and the algorithms used.

The Training Data.

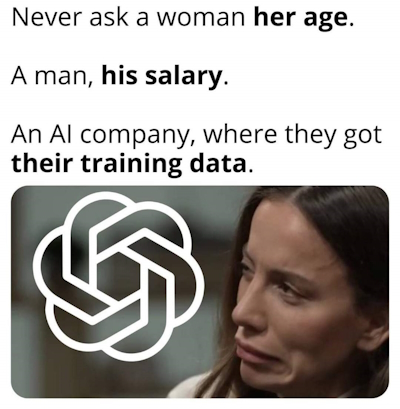

The focus so far has been on what goes into their training data, and that has been an issue, leading to lawsuits and, less obviously, raising questions of trust in the companies involved.

…The race to lead A.I. has become a desperate hunt for the digital data needed to advance the technology. To obtain that data, tech companies including OpenAI, Google and Meta have cut corners, ignored corporate policies and debated bending the law, according to an examination by The New York Times…

“How Tech Giants Cut Corners to Harvest Data for A.I.”, Cade Metz, Cecilia Kang, Sheera Frenkel, Stuart A. Thompson and Nico Grant, New York Times, April 6, 2024 [1]

Of note, too, is that Google has been indexing AI-generated books, which are what is called ‘synthetic data’. Training on synthetic data has been warned against, but it is something that companies are planning for or even doing already, consciously or unconsciously.

While some of these actions are questionably legal, to some the ethics are less in question, thus the revolt mentioned last year against AI companies using content without permission. That revolt is of questionable effect because no one seems to have insight into what the training data consists of, and it seems no one is auditing it.

There’s a need for that audit, if only to allow for trust.

…Industry and audit leaders must break from the pack and embrace the emerging skills needed for AI oversight. Those that fail to address AI’s cascading advancements, flaws, and complexities of design will likely find their organizations facing legal, regulatory, and investor scrutiny for a failure to anticipate and address advanced data-driven controls and guidelines.

“Auditing AI: The emerging battlefield of transparency and assessment“, Mark Dangelo, Thomson Reuters, 25 Oct 2023.

While everyone is hunting down data, no one seems to be seriously working on oversight and audits, at least in a public way, though the United States is pushing for global regulations on artificial intelligence at the UN. The status of that doesn’t seem to have been updated, even as artificial intelligence is being used to select targets in at least two wars right now (Ukraine and Gaza).

There’s an imbalance here that needs to be addressed. It would be sensible to have external auditing of learning data models and the sources, as well as the algorithms involved – and, just to get a little ahead, also of the output. Of course, these sorts of things should be done with trading on stock markets as well, though that doesn’t seem to have made much headway in all the time that has been happening, either.

Some websites are trying to block AI crawlers, and it is an ongoing process. Blocking them requires knowing who they are, and it doesn’t guarantee that bad actors won’t stop by.
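For those curious what that blocking looks like in practice, here is a minimal sketch, assuming a site relies on the usual robots.txt file. The user-agent tokens below (GPTBot, CCBot, Google-Extended) are ones their operators have published, but the list is illustrative rather than complete and will go stale; the `is_allowed` helper and the example.com URLs are made up for this example. It uses Python’s standard `urllib.robotparser` to check what a given crawler would be permitted to fetch.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt. The crawler names are an assumption about which
# bots a site might want to turn away; a real list needs regular upkeep.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url.

    Hypothetical helper for this post. It only reports what the file asks
    for; it cannot enforce anything against a crawler that ignores it.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "CCBot", "Google-Extended", "Mozilla/5.0"):
        verdict = "allowed" if is_allowed(ROBOTS_TXT, agent, "https://example.com/post") else "blocked"
        print(f"{agent}: {verdict}")
```

Both caveats from above show up here: the file only names crawlers someone already knows about, and it is a request rather than a lock, so a crawler that ignores robots.txt or disguises its user agent passes straight through.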

There is a new bill being pressed in the United States, the Generative AI Copyright Disclosure Act, that is worth keeping an eye on:

“…The California Democratic congressman Adam Schiff introduced the bill, the Generative AI Copyright Disclosure Act, which would require that AI companies submit any copyrighted works in their training datasets to the Register of Copyrights before releasing new generative AI systems, which create text, images, music or video in response to users’ prompts. The bill would need companies to file such documents at least 30 days before publicly debuting their AI tools, or face a financial penalty. Such datasets encompass billions of lines of text and images or millions of hours of music and movies…”

“New bill would force AI companies to reveal use of copyrighted art“, Nick Robins-Early, TheGuardian.com, April 9th, 2024.

Given how much information is used by these companies already from Web 2.0 forward, through social media websites such as Facebook and Instagram (Meta), Twitter, and even search engines and advertising tracking, it’s pretty obvious that this would be in the training data as well.

The Algorithms.

The algorithms for generative AI are pretty much trade secrets at this point, but one has to wonder why so much data is needed to feed the training models when better algorithms could require less. Consider that a well-read person could answer some questions, even as a layperson, with less of a carbon footprint. We have no insight into the algorithms either, which makes it seem as though these companies are simply throwing more hardware and data at the problem rather than being more efficient with the data and hardware that they already took.

There’s not much news about that, and it’s unlikely that we’ll see any. It does seem like fuzzy logic is playing a role, but it’s difficult to say to what extent, and given the nature of fuzzy logic, it’s hard to say whether its implementation is as good as it should be.

The Hardware.

Generative AI has brought about an AI chip race between Microsoft, Meta, Google, and Nvidia, which definitely leaves smaller companies that can’t afford to compete in that arena at a disadvantage so great that it could be seen as impossible, at least at present.

The future holds quantum computing, which could make all of the present efforts obsolete, but no one seems interested in waiting around for that to happen. Instead, it’s full speed ahead, with Nvidia presently dominating the market for hardware for these AI companies.

The Output.

One of the larger topics that seems to have faded is what some called ‘hallucinations’ by generative AI. Strategic deception was also very prominent for a short period.

There is criticism that the algorithms are making the spread of false information faster, and the US Department of Justice is stepping up efforts to go after the misuse of generative AI. This is dangerous ground, since algorithms are being sent out to hunt products of other algorithms, and the crossfire between them doesn’t care too much about civilians.[2]

Then there is the impact on education: as students use generative AI, education itself has been disrupted. It is being portrayed as an overall good, which may simply be an acceptance that it’s not going away. It’s interesting to consider that the AI companies have taken more content than students could possibly get or afford in the educational system, which is something worth exploring.

ChatGPT is presently 82% more persuasive than humans, likely because it has been trained on persuasive works (Input; Training Data). Since most content on the internet is marketing either products, services or ideas, that was predictable. While it’s hard to say how much content being put into training data feeds on our confirmation biases, it’s fair to say that at least some of it does. Then there are the other biases that the training data inherits through omission or selective writing of history.

There are a lot of problems, clearly, and many of them can be traced back to the training data. Even on a good day it is as imperfect as we are, and it can magnify or distort those imperfections, or even be consciously influenced by good or bad actors.

And that’s what leads us to the Big Picture.

The Big Picture.

…For the past year, a political fight has been raging around the world, mostly in the shadows, over how — and whether — to control AI. This new digital Great Game is a long way from over. Whoever wins will cement their dominance over Western rules for an era-defining technology. Once these rules are set, they will be almost impossible to rewrite…

“Inside the shadowy global battle to tame the world’s most dangerous technology“, Mark Scott, Gian Volpicelli, Mohar Chatterjee, Vincent Manancourt, Clothilde Goujard and Brendan Bordelon, Politico.com, March 26th, 2024

What most people don’t realize is that the ‘game’ includes social media and the information it provides for training models, such as what is happening with TikTok in the United States now. There is a deeper battle, and just perusing content on social networks gives data to those building training models. Even WordPress.com, where this site is presently hosted, is selling data, though there is a way to unvolunteer oneself.

Even the Fediverse is open to data being pulled for training models.

All of this, combined with the persuasiveness of generative AI that has given psychology pause, has democracies concerned about the influence. A recent example is Grok, Twitter X’s AI for paid subscribers, which fell victim to what was clearly satire and caused a panic – which should also have us wondering about how we view intelligence.

…The headline available to Grok subscribers on Monday read, “Sun’s Odd Behavior: Experts Baffled.” And it went on to explain that the sun had been, “behaving unusually, sparking widespread concern and confusion among the general public.”…

“Elon Musk’s Grok Creates Bizarre Fake News About the Solar Eclipse Thanks to Jokes on X“, Matt Novak, Gizmodo, 8 April 2024

Of course, some levity is involved in that one, whereas Grok posting that Iran had struck Tel Aviv (Israel) with missiles seems dangerous, particularly when posted to the front page of Twitter X. It shows the dangers of fake news with AI, deepening concerns related to social media and AI, and should have us asking why billionaires involved in artificial intelligence wield the influence that they do. How much of that is generated? We have an idea how much it is lobbied for.

Meanwhile, Facebook has been spamming users and has been restricting accounts without demonstrating a cause. If there were a video tape in a Blockbuster on this, it would be titled, “Algorithms Gone Wild!”.

Journalism is also impacted by AI, though real journalists tend to be rigorous in their sources. Real newsrooms have rules, and while we don’t have that much insight into how AI is being used in newsrooms, it stands to reason that if a newsroom is to be a trusted source, it will go out of its way to make sure that it is: it has a vested interest in getting things right. This has not stopped some websites parading as trusted sources from disseminating untrustworthy information because, even in Web 2.0 when the world had an opportunity to discuss such things at the World Summit on the Information Society, the country with the largest web presence did not participate much, if at all, at a government level.

Then we have the thing that concerns the most people: their lives. Jon Stewart even did a Daily Show on it, which is worth watching, because people are worried, with good reason, about generative AI taking their jobs. Even as the Davids of AI[3] square off for your market share, layoffs have been happening in tech as they reposition for AI.

Meanwhile, AI is also apparently being used as a cover for some outsourcing:

Your automated cashier isn’t an AI, just someone in India. Amazon made headlines this week for rolling back its “Just Walk Out” checkout system, where customers could simply grab their in-store purchases and leave while a “generative AI” tallied up their receipt. As reported by The Information, however, the system wasn’t as automated as it seemed. Amazon merely relied on Indian workers reviewing store surveillance camera footage to produce an itemized list of purchases. Instead of saving money on cashiers or training better systems, costs escalated and the promise of a fully technical solution was even further away…

“Don’t Be Fooled: Much “AI” is Just Outsourcing, Redux“, Janet Vertesi, TechPolicy.com, Apr 4, 2024

Maybe AI is creating jobs in India by proxy. It’s easy to blame problems on AI, too, which is a larger problem because the world often looks for something to blame and having an automated scapegoat certainly muddies the waters.

And the waters of The Big Picture of AI are muddied indeed – perhaps partly by design. After all, those involved are making money, and they now have even better tools to influence markets, populations, and you.

In a world that seems to be running a deficit when it comes to trust, the tools we’re creating seem to be increasing rather than decreasing that deficit at an exponential pace.

1. The full article at the New York Times is worth expending one of your free articles, if you’re not a subscriber. It gets into a lot of specifics, and is really a treasure chest of a snapshot of what companies such as Google, Meta and OpenAI have been up to and have released as plans so far.

2. That’s not just a metaphor, as the Israeli use of Lavender (AI) has been outed recently.

3. Not the Goliaths. David was the one with newer technology: The sling.