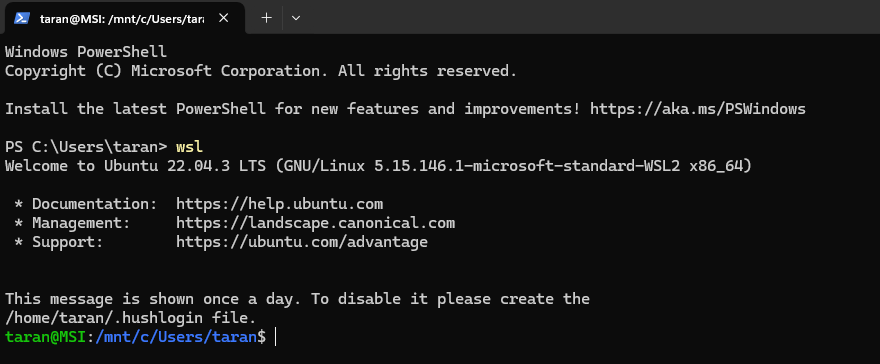

@knowprose.com who run a LLM on your computer while a SLM does almost the same but needs less performance?

Stefan Stranger

This was a great question, and I want to flesh out my answer, because Small Language Models (SLMs) really are less processor- and memory-intensive than Large Language Models (LLMs).

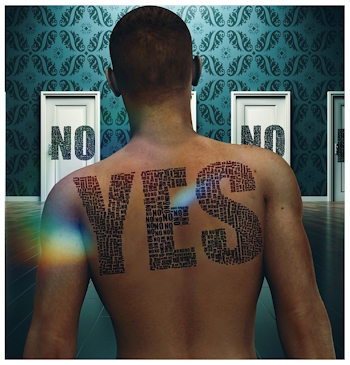

Small isn’t always bad, and large isn’t always good. My reasons for choosing an LLM over an SLM are pretty much what I told Stefan, but I wanted to answer more thoroughly.

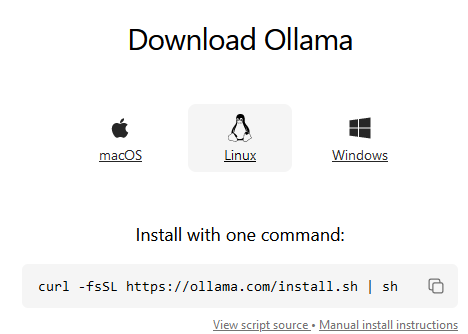

There is a good write-up on the differences between LLMs and SLMs here, recent as of this writing. It’s worth a look if you haven’t heard of SLMs before.

Discovering My Use Cases

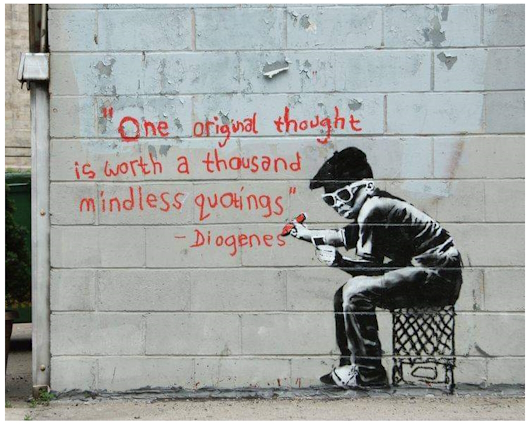

I have a few use cases that I know of. One of them is writing – not having the LLM do the writing (what a terrible idea), but having it help me figure things out as I go along. I’m in what some would call Discovery or, in the elder schools of software engineering, requirements gathering. The truth is that, like most humans, I’m stumbling along trying to find what I actually need help with.

For example, yesterday I was deciding on the name of a character. Names are important and can be symbolic, and I tend to dive down rabbit holes of etymology. Discerning the meanings of names is an arduous process, made no easier by the many baby-name websites, which aren’t always accurate or culturally inclusive. My own name, for example, has different meanings in India and in Old Celtic, but if you search for it you’ll find results with a Western bias.

Before I know it, I haven’t picked a name and am instead perusing something on Wikipedia. It’s a time sink.

So I kicked it around with Teslai (I let the LLM I’m fiddling with pick its own name, for giggles). It was imperfect, and in some ways downright terrible, but it kept me on task, and I came up with a name in under 15 minutes on something that could easily have eaten up a day of my time as I indulged my thirst for knowledge.

How often do I need to do that? Not very often, but so far an LLM seems to be better at these tasks.

I’ve also been giving it things I’ve written to critique. It called me out for using passive voice in places, and that’s a fair assessment, but it has also misread some of what I wrote. For example, when it first read “Red Dots Of Life”, it got it completely wrong: it thought the red dots were metaphors for what was important, when in fact they were about other people driving you to distraction to get what they thought was important.

Could an SLM do these things? Because SLMs are relatively new and trained on less data, it seems unlikely, but it’s possible. The point is not the examples, but the way I’m exploring my own needs. In that regard – and this could be unfair to SLMs – I opted to go with more mature LLMs, at least for now, until I figure out what I need from a language model.

Maybe I will use SLMs in the future. Maybe I should be using one now. I don’t know. I’m fumbling through this because I have eclectic interests that create eclectic needs. I don’t know what I will throw at it next, but because LLMs are allegedly better trained, I’m rolling with them for now.

So far, it seems to be working out.

In an odd way, I’m learning more about myself through the use of the language model as well. It’s not telling me anything special, but it provokes introspection. That has value. People spend so much time being told what they need by marketers that they don’t necessarily know what they could use the technology for – which is why Fabric motivated me to get into all of this.

Now, the funny thing is that I don’t agree with the premise behind LLMs, namely their owners’ need to keep adding more information to them. I believe better algorithms are needed so that models can learn from less information. I’ve been correcting an LLM as a human who has been trained on far less information than it has, which makes a solid case for tweaking algorithms rather than shoving more information in.

In that regard, we should be looking at SLMs more, and demanding more of them – but what do we actually need from them? The marketers will tell you what they want to sell you, and you can sing their song, or you can go explore on your own – as I am doing.

Can you do it with an SLM? Probably. I simply chose to use an LLM, and I believe it suits me, but that’s just my opinion, and I acknowledge I could be wrong. Sometimes you just pick a direction, go, and hope it’s the right general direction.

What’s right for you? I can’t tell you; that would be presumptuous. You need to explore your own needs and make as informed a decision as I have.