“When the mob governs, man is ruled by ignorance; when the church governs, he is ruled by superstition; and when the state governs, he is ruled by fear. Before men can live together in harmony and understanding, ignorance must be transmuted into wisdom, superstition into an illumined faith, and fear into love.”

Manly P. Hall, The Secret Teachings of All Ages.

It’s almost impossible to keep up with all the discussion about what’s being marketed as artificial intelligence, particularly the speculation about how it will affect our lives.

Since the late 1970s, we have evolved technology from computers to personal computers to devices we carry around and still call ‘phones’, although their main purposes no longer seem to revolve around voice contact. In that time, technology has gone from sitting on a pedestal that few could reach to one that most of humanity can reach.

It has been a strange journey so far. If we measure our progress by technology, we’ve been successful. That’s a lot like measuring your left foot with your right foot, though, assuming you are equally equipped. If we measure success fiscally and look at the economics of the world, a few people have gotten fairly rich at the expense of a lot of people. If we measure it in access to knowledge, more people have that access than at any other time in history, but it comes with the severe downside of a great deal of misinformation.

We don’t really have a good measure of technology’s impact on our lives, because outside of technology itself we don’t seem to think that’s important. Yet in my lifetime we have had this odd tendency to measure progress by technology. At the end of my last session with my psychologist, she was talking about trying to go paperless in her office. She is not alone.

It’s 2023. The paperless office was one of the technological promises made in the late 1980s, more than three decades ago. In that same period, it seems that the mob has increasingly governed, superstition has governed the mob, and the state has increasingly tried to govern. Taken as a whole, despite advances in science and technology, we, the mob, seem to have become more ignorant, more superstitious and more fearful. Worse, our attention spans seem to have dropped to 47 seconds. By that measure, many people have already stopped reading because of ‘TLDR’.

Into all of this, we now have artificial intelligence to contend with:

…Some of the greatest minds in the field, such as Geoffrey Hinton, are speaking out against AI developments and calling for a pause in AI research. Earlier this week, Hinton left his AI work at Google, declaring that he was worried about misinformation, mass unemployment and future risks of a more destructive nature. Anecdotally, I know from talking to people working on the frontiers of AI, many other researchers are worried too…

HT Tech, “AI Experts Aren’t Always Right About AI“

Counter to all of this, we have a human population that is clearly better at multiplying than at math. Most people around the world are caught up in their day-to-day lives, working toward some form of success even as we are inundated with marketing and biased opinions parading around as news, all through the same channels that now connect us to the world.

In fact, it’s the price we pay. It’s the best price Web 2.0 could negotiate, and if we are honest we will acknowledge that at best it is less than perfect. The price is deeper than we originally thought, and perhaps deeper than we think even now. We’re still paying it, and we’re not quite sure what we bought.

“We are stuck with technology when what we really want is just stuff that works.”

Douglas Adams, The Salmon of Doubt.

In the late 1980s, boosts in productivity were sold to the public as ‘having more time for the things you love’ and variations on that theme, but that isn’t really what happened. Inside corporations, the focus shifted so that the more you did, the more you had to do. Speaking for myself, every employer hired for 40-hour work weeks but demanded closer to 50. Sometimes more.

Technology marketing hasn’t been that great at keeping promises. I write that as someone who survived as a software engineer with various companies over the decades. Like so many things in life, the minutiae multiplied.

“…Generative AI will end poverty, they tell us. It will cure all disease. It will solve climate change. It will make our jobs more meaningful and exciting. It will unleash lives of leisure and contemplation, helping us reclaim the humanity we have lost to late capitalist mechanization. It will end loneliness. It will make our governments rational and responsive. These, I fear, are the real AI hallucinations and we have all been hearing them on a loop ever since Chat GPT launched at the end of last year…”

Naomi Klein, “AI Machines Aren’t ‘Hallucinating’. But Their Makers Are“

There was a time when a software engineer had to go from collecting requirements to analysis to design to coding to testing to quality assurance to implementation. Now these are all done by teams. They may well all be done by versions of artificial intelligence in the future, but anyone who has dealt with clients firsthand will tell you that clients are not that great at giving requirements, and that reality has been rolled into development processes in various ways.

Then there is the media aspect, where we are all media tourists who pick our social media adventures, creating our own narratives from what social media algorithms pick for us. In a lot of ways, we have an illusion of choice when what we really get is whatever the algorithms decide we want to see. That silent bias also includes content paywalled into oblivion, never mind the linguistic biases, where we’re still discovering new ones.

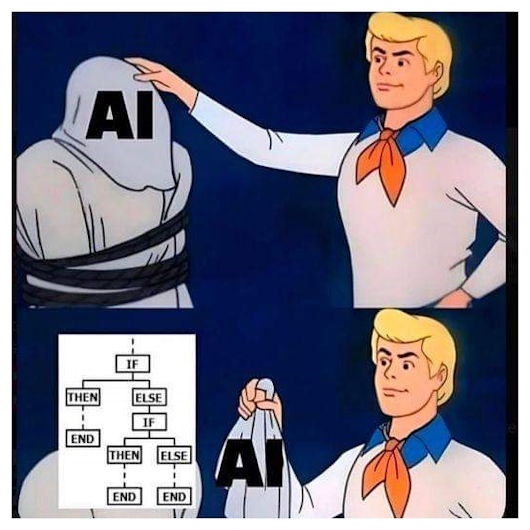

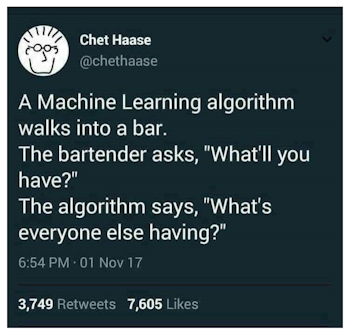

Large Language Models like ChatGPT, called artificial intelligences with some degree of accuracy, have access to information that may or may not be the same as what we have in our virtual caves. They ‘learn’ faster, communicate faster and perhaps more effectively, but they lack one thing, and its absence would make them fail a real Turing test: being human.

This is not to say that they cannot fake it convincingly by using Bayesian probability to stew our biases into something we want to read. We shouldn’t be too surprised: we put stuff in, we get stuff out, and the stuff we get out will look amazingly like the stuff we put in. It is a step above a refrigerator, in that we put in ingredients and get cooked meals out, but just because a meal tastes good doesn’t mean it’s nutritious.

“We’re always searching, but now we have the illusion we’re finding it.”

Dylan Moran, “Dylan Moran on sobriety, his childhood, and the internet | The Weekly | ABC TV + iview“

These stabs at humanity through technology are becoming increasingly impressive. Yet they are stabs, with all that potentially goes with stabs. A world limited to artificial intelligences can only make progress within the parameters and information that we give them. They are limited, and they are as limited as we are, globally, biases and all. No real innovation happens beyond those parameters and that information. They do not create new knowledge; they simply dress up old knowledge in palatable ways very quickly, but what is palatable now may not be next year. Or next month.

If we had been dependent on artificial intelligences in the last century, we might not have made many of the discoveries we did. The key word, of course, is dependent. On the other hand, if we had understood their limitations and incentivized humanity to add to this Borg-like collective of information, we might have made technological and scientific progress faster, but… would we have been able to keep up with it economically? Personally?

We’re there now. We’re busy listening to a lot of billionaires talk about artificial intelligences as if billionaires were invested in humanity. They’re not. We all know they’re not, though some of us pretend they are. Their worldview is very different. This does not mean it’s wrong, but if we’re going to somehow codify an artificial intelligence with opinions, it seems we need more than billionaires and ‘experts’ in such conversations. I don’t know what the solution is, but I’m in good company.

The present systems we have are biased. It’s the nature of any system, and the first role of a sustainable system is making sure it can sustain itself. There are complicated intellectual property issues that can discourage new information from being added to the pool, and these have to be balanced against economic systems that, in my opinion, should also make a livelihood possible for those who create and innovate, not just in science and technology but in other ways that advance humanity.

I’m not sure what the answers are. I’m not even sure what the right questions are. I’m fairly certain the present large language models don’t have them either, because we have not yet had good questions, or good answers, for the problems affecting us as a species.

I do know that’s a conversation we should be having.

What do you think?

When I first started programming, I did a lot of walking. A few months ago I checked the distance I walked every day just back and forth to school, and it was about 3.5 km, not counting being sent to the store or running errands. At the same time, we had an IBM System/36 and a PC network at school where space was limited and time was limited, so you didn’t have much time to be productive on the computer and you had better have your work locked down.