Everyone’s out to protect you online because everyone’s out to get you online. It’s a weird mix of people who want to use your data for profit and those who want to use your data for profit.

Planned obsolescence has become ubiquitous in this technological age. It wasn’t always this way. Things used to be built to last, not to be replaced. That is worth pondering before joining a line to get the latest iPhone, or when a software manufacturer shifts from a purchase model (where the license sometimes indicates you don’t really own the software!) to a subscription model.

The case has been made that software can’t be produced or maintained for free. The case has also been made, with less of a marketing department behind it, that Free Software and Open Source software can do the same at a reduced cost. The negotiations are ongoing, but those who built their corporations after dumpster diving for code printouts definitely have the upper hand.

Generally speaking, the average user doesn’t need complexity. In fact, the average user just wants a computer for browsing the internet and writing simple documents and spreadsheets. Corporations producing software on the scale of Microsoft, Google, Amazon, and so on don’t really care too much about what you need; they care about maintaining market share so that they can keep making money. The result is software with more features than the average user knows what to do with.

Where the business decisions are made, it’s about the bottom line. It’s oddly like something else we’re seeing a lot of lately. It seems unrelated, yet it’s pretty close to the same thing when you think about it.

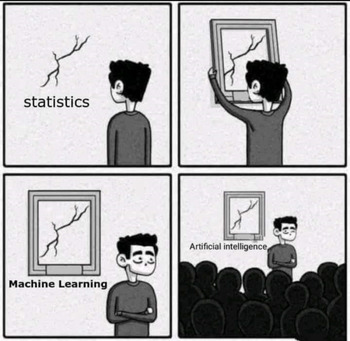

“…This is true of the cat detector, and it is true of GPT-4 — the difference is a matter of the length and complexity of the output. The AI cannot distinguish between a right and wrong answer — it only can make a prediction of how likely a series of words is to be accepted as correct. That is why it must be considered the world’s most comprehensively informed bullshitter rather than an authority on any subject. It doesn’t even know it’s bullshitting you — it has been trained to produce a response that statistically resembles a correct answer, and it will say anything to improve that resemblance...

…It’s the same reason AI can produce a Monet-like painting that isn’t a Monet — all that matters is it has all the characteristics that cause people to identify a piece of artwork as his. Today’s AI approximates factual responses the way it would approximate “Water Lilies.”…”

The Great Pretender, Devin Coldewey, TechCrunch, April 3, 2023.

Abstracted away, large language models aren’t that different from business teams, except that business teams could actually care about their consumers. Instead, they rely on statistics, just like large language models do. It’s a lot like the representations of Happy, Strong and Tough that I wrote about with AI-generated images. It’s an approximation based on what the models and algorithms are trained on, which is… us.

There could be a soul to the Enterprise, I suppose, but maybe the Enterprise needs to remember where it comes from.