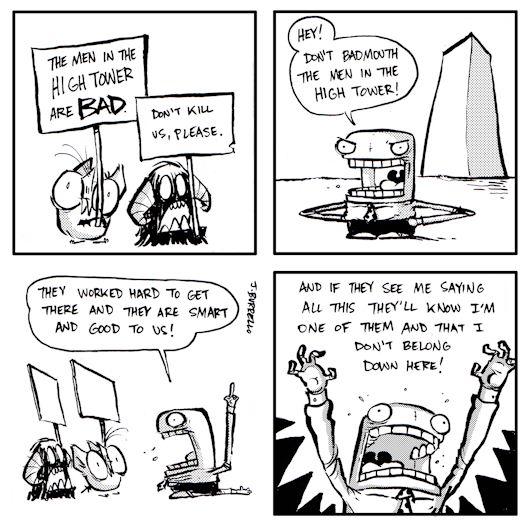

The last few days I’ve been doing some actual experimentation, initially begun because of Daniel Miessler’s Fabric, an Open Source Framework for using artificial intelligence to augment us lowly humans instead of the self-lauding tech bros whose business model boils down to “move fast and break things”.

It’s hard to trust people with that sort of business model when you understand your life is potentially one of those things, and you like that particular thing.

I have generative AIs on all of my machines at home now, which was not as difficult as people might think. I’m writing this up because, to impress upon someone how easy it was, I walked them through doing it in minutes over the phone on a Windows machine. I’ll write that walkthrough up as my next post, since it apparently seems difficult to people.

For myself, the vision Daniel Miessler brought with his implementation, Fabric, is inspiring in its own way, though I’m not convinced that AI can make anyone a better human. I think the idea of augmenting is good, and with all the infoglut I contend with, leaning on an LLM makes sense in a world where everyone else is being sold on the idea of using one, and on how to use it.

People who wax poetic about how an AI has changed their lives in good ways are simply waxy poets, as far as I can tell.

For me, with writing and other things I do, there can be value here and there – but I want control. I also don’t want to have to risk my own ideas and thoughts by uploading even a hint of them to someone else’s system. As a software engineer, I have seen loads of data given to companies by users, and I know what can be done with it, and I have seen how flexible ethics can be when it comes to share prices.

Why Installing Your Own LLM is a Good Idea. (Pros)

There are various reasons why, if you’re going to use an LLM, it’s a good idea to have it locally.

(1) Data Privacy and Security: If you’re an individual or a business, you should look after your data and security because nobody else really does, and some profit from your data and lack of security.

(2) Control and Customization: You can fine-tune your LLM on your own data (without compromising your privacy and security). As an example, I can feed an LLM various things I’ve written and have it summarize where ideas I’ve written about connect – and even tell me if I have something published where my opinion has changed – without worrying about handing all of that information to someone else. I can tailor it myself – and that isn’t as hard as you think.

(3) Independence from subscription fees; lowered costs: The large companies will sell you as much as you can buy, and before you know it you’re stuck with subscriptions you don’t use. Also, since the technology market is full of companies that get bought out and have their license agreements changed, you avoid vendor lock-in.

(4) Operating offline; possible improved performance: With the LLM I’m working on, being unable to access the internet during an outage does not stop me from using it. What’s more, my prompts aren’t queued, or prioritized behind someone who pays more.

(5) Quick changes are quick changes: You can iterate faster, try something with your model, and if it doesn’t work, you can find out immediately. That is both convenient and cost-cutting.

(6) Integrate with other tools and systems: You can integrate your LLM with other stuff – as I intend to with Fabric.

(7) You’re not tied to one model. You can use different models with the same installation – and yes, there are lots of models.

The Cons of Using an LLM Locally.

(1) You don’t get to hear someone that sounds like Scarlett Johansson tell you about the picture you uploaded¹.

(2) You’re responsible for the processing, memory and storage requirements of your LLM. This is surprisingly not as bad as you would think, but remember – backup, backup, backup.

(3) If you plan to deploy an LLM as a business model, it can get very complicated very quickly. In fact, I don’t know all the details, but that’s nowhere in my long-term plans.
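To put a rough number on the memory and storage point in (2), here is a minimal sketch in Python. It rests on one piece of arithmetic only: the weights of a model take roughly the parameter count multiplied by the bits stored per weight. The function name and the example model sizes are my own illustrations, not anything from Fabric or a particular LLM runner, and real usage will be somewhat higher because of context memory and runtime overhead.

```python
def estimate_weight_size_gib(num_parameters: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in GiB.

    Hypothetical helper for illustration: counts only the raw weights,
    ignoring context (KV cache) memory and any file-format overhead.
    """
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


if __name__ == "__main__":
    # Illustrative parameter counts; substitute the model you are considering.
    for label, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        for bits in (16, 8, 4):
            size = estimate_weight_size_gib(params, bits)
            print(f"{label} model at {bits}-bit: ~{size:.1f} GiB")
```

The takeaway is the shape of the estimate rather than the exact figures: halving the bits per weight roughly halves the space needed, which is a large part of why smaller, compressed models can fit on ordinary home machines.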

Deciding.

In my next post, I’ll write up how to easily install an LLM. I have one on my M1 Mac Mini, my Linux desktop and my Windows laptop. It’s amazingly easy, but going in, it can seem very complicated.

What I would suggest about deciding is simply to try it and see how it works for you, or at least to know that it’s possible and that it will only get easier.

Oh, that quote by Diogenes at the top? No one seems to have a source. Nice thought, though a possible human hallucination.

¹ OK, that was a cheap shot, but I had to get it out of my system.