I’ve been spending, like so many, an inordinate amount of time considering the future of what we accuse of being artificial intelligence, particularly since I’ve been focusing on my writing and suddenly we have people getting things written for them by ChatGPT. I’ll add that the present quality doesn’t disturb me as much as the reliance on it.

Much of what these artificial intelligences pull from is on the Internet, and if you’ve spent much time on the Internet, you should be worried. It goes a bit beyond that if you think a bit ahead.

Imagine, if you would, artificial intelligences quoting artificial intelligences trained by artificial intelligences. It’s really not that far away, and it may have already begun: bloggers looking to capitalize on generating content quickly thrash their keyboards to feed prompts to ChatGPT and its ilk, creating blog posts that pop up in search engine results when they market their content. Large language models (of which ChatGPT is one) suddenly think this is great content, because what is repeated most makes predictive models say, “Aha! This must be what they mean or want!”
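To make that repetition effect concrete, here is a toy sketch. It is not how ChatGPT works internally; it is a simple word-pair counter that predicts the next word from whatever it has seen most often, and the sample posts are invented for illustration. It only shows how flooding the training text with repeated generated posts can change what a frequency-based predictor says.

```python
from collections import Counter, defaultdict

def train_bigrams(documents):
    """Count which word follows which across all documents."""
    counts = defaultdict(Counter)
    for doc in documents:
        words = doc.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequently seen follower of `word`, or None."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical sample data: a few human-written lines...
human_posts = [
    "the economy needs diversity",
    "the economy needs investment",
    "the economy needs diversity",
]
# ...and the same generated line republished five times.
generated_posts = ["the economy needs tourism"] * 5

before = predict_next(train_bigrams(human_posts), "needs")
after = predict_next(train_bigrams(human_posts + generated_posts), "needs")
print(before, "->", after)
```

In this toy, the prediction after "needs" changes once the repeated generated posts outnumber the human ones, which is the recursive worry in miniature.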

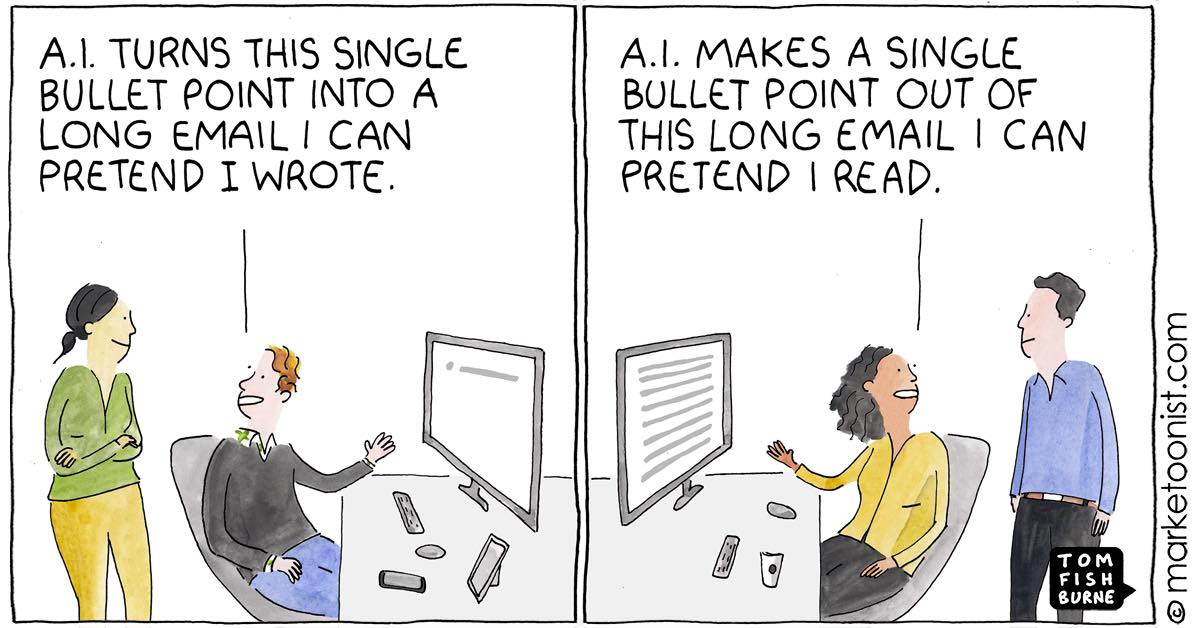

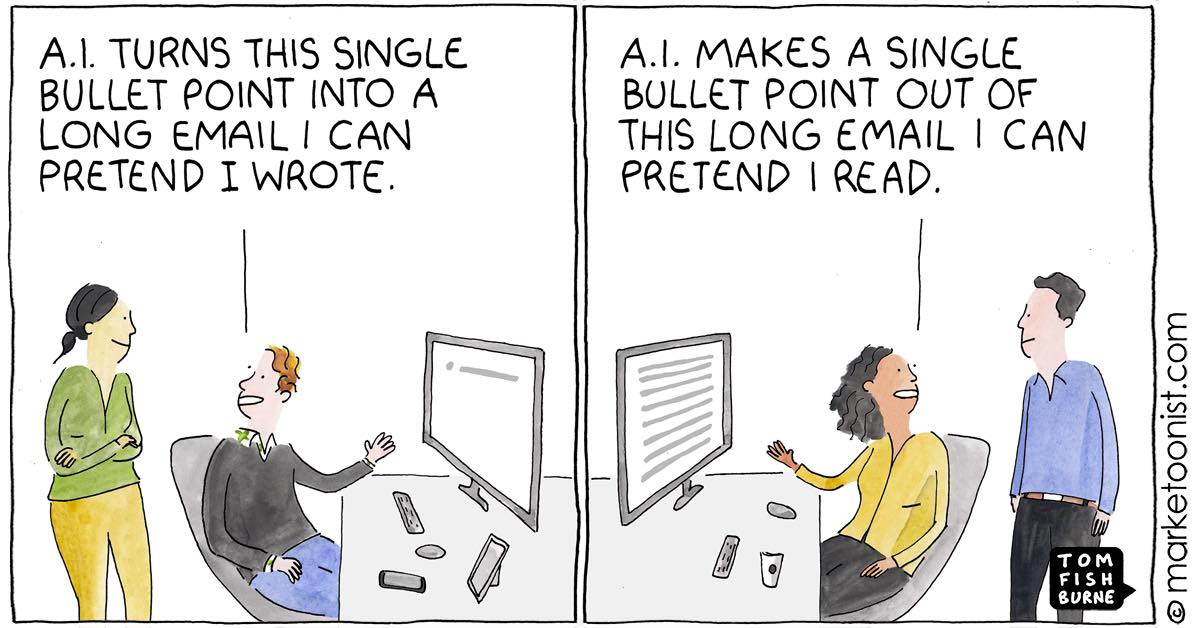

This cartoon at Marketoonist.com pokes at the problem with “AI Written, AI Read”, but not the recursive issue. Fortunately, on a personal blog I can use it; otherwise it would take us down the path of copyright and the Stable Diffusion lawsuit.

This has the potential to be a scary future. People who look forward a few steps have started an open letter, which of course I signed, asking to Pause Giant AI Experiments. It’s a symbolic thing, I fully expect, because the people who are behind these artificial intelligences aren’t really too interested in much more than their bottom line, with the notable exception of DeepAI.org as far as I know.

Meanwhile, so many seem to think that these Large Language Models are a nice replacement for the nerds doing their homework because they don’t have to hang out with them. There’s a reason I bring this up. One of the reasons Artificial Intelligences, Large Language Model or otherwise, are considered creative is because they are basically well read people with mega-gobs of information at their digital synapses. They make it easier to find the intersectionality, a realm that has become more and more important and less and less thought about in our society where specializations require diplomas to do things that… one really doesn’t need a diploma to do.

And it doesn’t know everything. For example, I know a bit about Trinidad and Tobago, so I posed a question to ChatGPT related to the economy of Trinidad and Tobago (response in italics):

What are good exports that Trinidad and Tobago could focus on to help build the economy and better the socioeconomic status of it’s citizens?

Trinidad and Tobago has a diversified economy with a strong focus on the energy sector, particularly oil and gas production. However, there are several other sectors that the country could focus on to diversify its exports and boost its economy. Some potential export industries for Trinidad and Tobago include:

Tourism: Trinidad and Tobago has a rich cultural heritage, beautiful beaches, and unique natural attractions such as the Caroni Swamp and the Asa Wright Nature Centre. The country has the potential to further develop its tourism industry and attract visitors from around the world.

Agriculture: Trinidad and Tobago has a favorable climate for agriculture and could focus on exporting products such as cocoa, coffee, citrus fruits, and vegetables. The country could also explore the potential for organic farming and niche products such as spices and herbs.

Creative industries: Trinidad and Tobago has a vibrant cultural scene and could focus on exporting its music, film, and other creative products. The country has a rich tradition of Carnival, which could be further developed as a major tourist attraction and cultural export.

Information and communication technology (ICT): Trinidad and Tobago has a well-educated and tech-savvy workforce, and the country could focus on developing its ICT sector and exporting software, IT services, and other digital products.

Manufacturing: Trinidad and Tobago could focus on developing its manufacturing industry and exporting products such as chemicals, plastics, and processed foods.

Of course, developing any of these industries would require significant investment and effort, and there may be other factors to consider such as infrastructure, labor costs, and market demand. However, focusing on these industries could help diversify the economy and provide new opportunities for the citizens of Trinidad and Tobago.

Sounds very good, right? Devil is in the details.

Tourism is a definite possibility, but with the decades-long crime situation (which I and others believe stems from socioeconomics related to the lack of diversity in the economy), as well as flash flooding and a focus on marketing rather than quality… no. I do like that it mentioned the Asa Wright Nature Centre, and if anyone actually does come down this way, I can happily point you to other places that you won’t find in the tourist brochures.

Agricultural land has been used by the government to build housing, so arable land is decreasing with every project the Housing Development Corporation creates, as well as with every agricultural plot converted to residential, commercial or industrial use, depending on who greases the wheels.

Manufacturing would be brilliant. Very little is made in Trinidad and Tobago, but if you’re going to be competing with robots and artificial intelligences in the developed world, we can throw that out.

ICT is my personal favorite, coming from a chatbot that has already got people generating code with it. Seriously, ChatGPT?

Everything ChatGPT has presented has been said more than once in the context of diversifying the economy of Trinidad and Tobago, and it’s a deep topic that most people only understand in a very cursory way. The best way to judge an economy is to observe it over time. In the grand scale of global discourse, the estimated population of 1.5 million people in a dual island nation is not as interesting to the rest of the world as Trinbagonians would like to think it is – like any other nation, most people think it’s the center of the universe. It’s not a big market, young intelligent people leave for opportunities as soon as they can (brain drain), and what we are left with aspires to mediocrity while hiring friends over competency. A bit harsh, but a fair estimation in my opinion.

How did ChatGPT come up with this? With data it could access. Since Trinidad and Tobago is an infinitesimal slice of global interest, not much content is generated about it other than government press releases by politicians who want to be re-elected so that they can keep their positions. That situation is endemic to any democracy that elects politicians, but in Trinidad and Tobago there are no maximum terms for some reason. A friend sailing through the Caribbean mentioned how hard it was to depart an island in the Caribbean, and I responded with, “Welcome to the Caribbean, where every European colonial bureaucracy has been perpetuated into stagnancy.”

Using Trinidad and Tobago as a test case, an outlier of data in the global information database that we call the internet, can be pretty revealing about the limitations: there is a bias the model doesn’t know about because the data it feeds on is itself biased, and that is unlikely to change.

But It’s Not All Bad.

I love the idea that these large language models can help us find the intersectionality between specialties. Much of the decades of my life have been spent doing just that. I read all sorts of things, and much of what I have done in my lifetime has been cross referencing ideas from different specialties that I have read up on. I solved a memory issue in a program on the Microsoft Windows operating system by pondering Costa Rican addresses over lunch one day. Intersectionality is where many things wander off to die these days.

Sir Isaac Newton pulled from intersectionality. One biography describes him as a multilingual alchemist whose notes were written in multiple languages, which, one must consider, is probably a reflection of his internal dialogue. He didn’t really discover gravity – people knew things fell down well before him, I’m certain – but he was able to pull from various sources and come up with a theory that he could publish. He became famous for that theory, and infamous within academia for his part in its politics.

J.R.R. Tolkien, who has recently had a great movie done on his life, was a linguist who was able to pull from many different cultures to put together fiction that has endured beyond his death. His book “The Hobbit” and the later trilogy “The Lord of the Rings” have inspired various genres of fantasy fiction, board games and much more.

These two examples show how pulling from multiple cultures, languages and specialties has been historically significant. Large Language Models do much the same.

Yet there are practical things to consider. Copyrights. Patents. Whether they are legal entities or not. The implicit biases on what they are fed, with the old software engineering ‘GIGO’ (Garbage in, garbage out) coming to mind with the potential for irrevocable recursion of supercharging that garbage and spewing it out to the silly humans who, as we have seen over the last decades, will believe anything. Our technology and marketing of it are well beyond what most people can comprehend.

We are sleeping, and our dreams of electric sheep come with an invisible electric fence with the capacity to thin the herd significantly.
