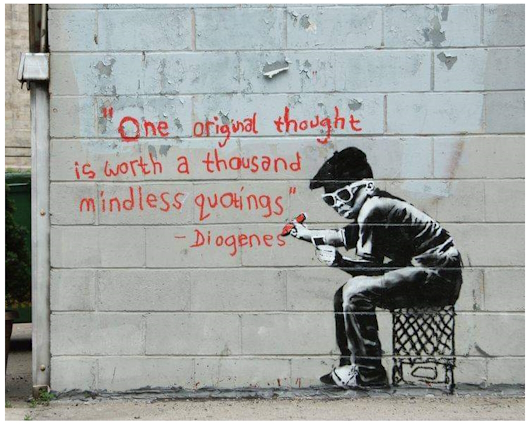

There was a time before social media and social networks as we know them, when people would talk to each other in person, isolated by geography. Then we figured out how to send our writing, and there was a period when pen-pals and postcards were important. News organizations adopted technology faster as reports came in from an ever-increasing geography until, finally, we ran out of geography.

Social media has become an integral part of our lives, connecting us to friends, families, communities, and global events in ways that were unimaginable just a few decades ago. Yet, as platforms like Facebook, Instagram, and Twitter (now X) dominate our digital landscape, serious questions arise about privacy, control, and freedom. Who owns our data? How are algorithms shaping our perceptions? Are we truly free in these spaces, or are we slaves to the algorithms?

It’s time to rethink social media. Enter decentralization and the Fediverse—a revolutionary approach to online networking that prioritizes freedom, community, and individual ownership.

The Problem with Centralized Social Media and Networks

At their core, mainstream social media platforms operate on a centralized model. They are controlled by corporations with one primary goal: profit. This model creates several challenges:

- Privacy Violations: Your data (likes, shares, private messages) becomes a commodity, sold to advertisers and third parties.

- Algorithmic Control: Centralized platforms decide what you see, often prioritizing sensational or divisive content to keep you engaged longer.

- Censorship: Content moderation decisions are made by corporations, leading to debates about free speech and fair enforcement of rules.

- Monopolization: A handful of companies dominate the space, stifling innovation and giving users little choice.

All of this comes to the fore with the recent controversy in the United States surrounding TikTok, which John Oliver recently covered on his show and which I have written about previously here on KnowProSE.com. The reasons given for banning TikTok largely describe what other social networks already do; the difference is who they do it for, or could potentially do it for. And yes, TikTok is as guilty as any other social network of the problems above.

These are real issues, too, related to who owns what regarding… you. Centralized platforms often leave you looking at the same kind of content, dragging you down a rabbit hole that simply reinforces your biases, and should you step out of line, you might find your reach limited or in some cases completely taken away. These issues have left many users feeling trapped, frustrated, and disillusioned.

Recently, there has been a reported mass exodus from one controlled network to another: from Twitter to Bluesky.

There’s a better way.

What Is the Fediverse?

The Fediverse (short for “federated universe”) is a network of interconnected, decentralized platforms that communicate using open standards, most notably the ActivityPub protocol. Unlike traditional social media, the Fediverse is not controlled by a single entity. Instead, it consists of independently operated servers, called “instances”, that can interact with each other.
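To make the idea of instances a little more concrete, here is a minimal sketch in Python of how a Fediverse handle maps to the server that hosts it. It assumes the WebFinger lookup convention that Mastodon and many other Fediverse platforms use to find accounts on other servers. The handle `@alice@example.social` and the function names are made up for illustration, and nothing here contacts the network.

```python
from urllib.parse import urlencode


def parse_handle(handle: str) -> tuple[str, str]:
    """Split a handle such as '@alice@example.social' into (user, domain)."""
    user, sep, domain = handle.strip().lstrip("@").partition("@")
    # Require exactly one user part and one domain part.
    if not sep or not user or not domain or "@" in domain:
        raise ValueError(f"expected user@domain, got {handle!r}")
    # Domain names are case-insensitive, so normalize to lowercase.
    return user, domain.lower()


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a handle (no request is sent)."""
    user, domain = parse_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"


if __name__ == "__main__":
    # Hypothetical account, used only as an example.
    print(webfinger_url("@alice@example.social"))
```

The point of the sketch is that the domain after the second `@` is the instance itself: any other server that wants to find the account asks that domain directly, with no central directory in between.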

Popular platforms within the Fediverse include:

- Mastodon: A decentralized alternative to Twitter.

- Pixelfed: An Instagram-like platform for sharing photos.

- PeerTube: A decentralized video-sharing platform.

- WriteFreely: A blogging platform with a focus on minimalism and privacy.

These platforms empower users by giving them control over their data, their communities, and their online experiences.

Why Decentralization Matters

- Data Ownership: In the Fediverse, your data stays under your control. Each server is independently operated, and many prioritize privacy and transparency.

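- Freedom of Choice: You can choose or create a server that aligns with your values. If you don’t like one instance, you can switch to another without losing your connections. On Mastodon, for example, moving your account carries your followers with you, although your existing posts stay behind on the old server.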

- Resilience Against Censorship: No single entity has the power to shut down the entire network.

- Community-Centric: Instead of being shaped by algorithms, communities in the Fediverse are human-driven and often self-moderated.

How You Can Join the Movement

- Explore Fediverse Platforms: Start by creating an account on Mastodon or another Fediverse platform. Websites such as joinmastodon.org can help you find the right instance.

- Support Decentralization: Advocate for open standards and decentralized technologies in your circles.

- Educate Others: Share the benefits of decentralization with your friends and family. Help them see that alternatives exist.

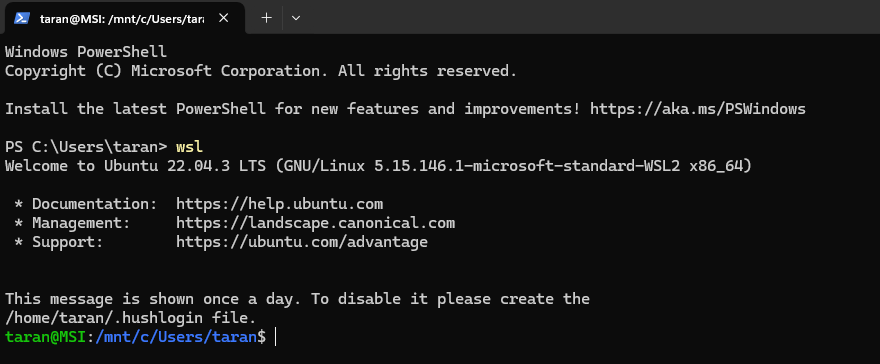

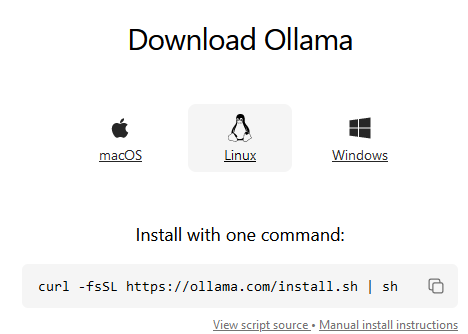

- Contribute to the Ecosystem: If you’re tech-savvy, consider hosting your own instance or contributing to open-source projects within the Fediverse.

The Call to Action

Social media doesn’t have to be controlled by a handful of tech giants. The Fediverse represents a vision for a better internet—one that values privacy, freedom, and genuine community. By choosing decentralized platforms, you’re taking a stand for a more equitable digital future.

So, what are you waiting for? Explore the Fediverse, join the conversation, and help build a social media landscape that works for everyone, not just the corporations.

Take the first step today. Decentralize your social media life and reclaim your digital freedom!