In my last post here, I said that the true value of the Anki Vector to me would be determined by the Software Development Kit (SDK), which wasn’t yet released.

I’m a bit disappointed that, to date, no one at Anki has answered my tweet about it, so when I tweeted them again I used a Douglas Adams reference about hiding things.

A fair criticism of Anki is that they aren’t very good at organizing information and keeping customers updated, even when they’re doing pretty good things. Frankly, the novelty of Vector and its potential seem to be what keeps them from paying much of a price for this faux pas. I also suspect the project has grown faster than the company has, which is a testament to engineering. It has apparently sold well, a testament to their marketing. Yet the information users are expected to have about the product is pretty hard to come by.

Installing the Vector SDK

I found the Vector SDK Alpha release note through an Anki blog entry that wasn’t as easy to find as I would have liked. Within it you’ll find the link to the SDK documentation, and within that you’ll find the actual downloads. I found all this through force of will, largely because Vector had been sitting impatiently on his charger for almost a week, making R2-D2-ish sounds and giving me the baleful look of WALL-E whenever I walked by.

It’s amazing how those eyes are really the center of how we see Vector.

I installed the Alpha SDK and configured Vector, which involves getting Vector’s IP address. It isn’t available through the phone app, and there’s a trick to it (in case you’re looking for it yourself): tap Vector’s top button twice, then raise and lower his arm. Vector’s IP address will then be shown where his eyes are. To get back to normal operation, raise and lower Vector’s arm again. Sacrificing a chicken is optional. Be careful with blood spatter; Vector is not fluid-proof.
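Vector’s IP address can change when your router hands out a new lease, and then the address shown on his face has to make it back into the SDK configuration. Here is a minimal sketch of doing that by hand with Python’s standard library. It assumes the configuration is an INI file with one section per robot serial number and an `ip` key in each section; the file location, the serial number, and the `update_vector_ip` helper are assumptions for illustration, not part of the SDK, so check your own config file before relying on it.

```python
import configparser
import ipaddress
from pathlib import Path


def update_vector_ip(config_path: Path, serial: str, new_ip: str) -> None:
    """Hypothetical helper: store the IP read off Vector's face for one robot.

    Assumes an INI layout with one section per robot serial number and an
    'ip' key in that section; this is an assumption, not a documented API.
    """
    # Reject misread digits early; raises ValueError for a malformed IPv4 address.
    addr = ipaddress.IPv4Address(new_ip)
    if not addr.is_private:
        raise ValueError(f"{addr} is not a private LAN address")

    config = configparser.ConfigParser()
    config.read(config_path)  # silently reads nothing if the file is missing
    if serial not in config:
        raise KeyError(f"no section for serial {serial!r} in {config_path}")

    # Update only the 'ip' key; other keys in the section are written back unchanged.
    config[serial]["ip"] = str(addr)
    with open(config_path, "w") as fh:
        config.write(fh)
```

Something like `update_vector_ip(Path.home() / ".anki_vector" / "sdk_config.ini", "00e20100", "192.168.1.42")`, where the path and serial are placeholders, would then rewrite the stored address under the assumed layout. Validating first means a misread digit fails loudly instead of turning into a confusing connection timeout later.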

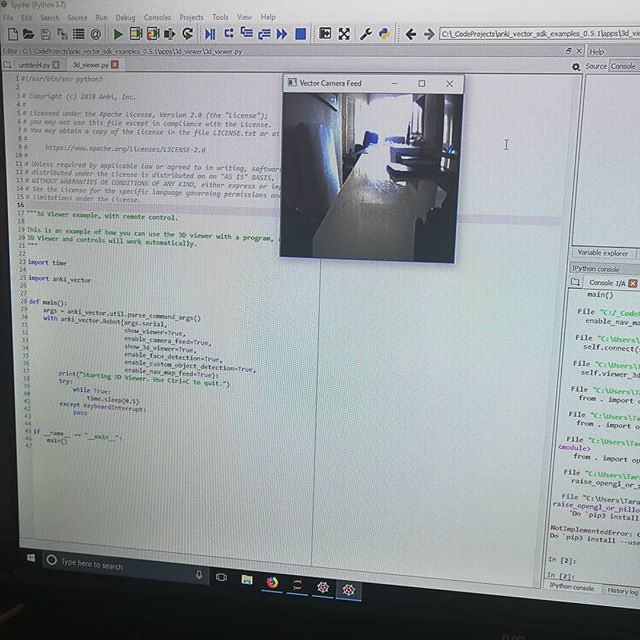

After that, it was a simple matter of firing up Spyder (part of the Anaconda data science platform for Python, but also available standalone) and running some of the example code, tweaking it here and there to get a feel for the capabilities of the Vector SDK Alpha.

This is where Anki shines: sharing code. The SDK documentation itself is also pretty good so far.

The Reality of the SDK

I was expecting a bit more from the SDK, which is my fault, and I acknowledge that. I had expected more in the way of interacting with the cloud itself, for example renaming Vector’s wake word or phrase, or changing his behavior during normal operation. That isn’t there yet, which effectively gives Vector a multiple personality disorder, complete with blackouts during which, for better and worse, the SDK hijacks him.

Imagine waking up and not knowing how you got somewhere, what you just did, and where that eyebrow went. That’s a fair anthropomorphization.

The SDK works over your wireless network: your code has to run on a machine on the same network as Vector, and that specific machine gets a certificate that authorizes it to run code on Vector. That’s a good security precaution; without it, people could hack other people’s Vectors and use them to look around their homes.
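Because the SDK only talks to Vector over the local network, a quick sanity check before chasing connection errors is whether your computer and Vector are even on the same subnet. This is a minimal standard-library sketch, not something the SDK provides; the `on_same_subnet` name and the /24 default are assumptions that fit most home routers.

```python
import ipaddress


def on_same_subnet(host_ip: str, robot_ip: str, prefix_len: int = 24) -> bool:
    """Return True if both IPv4 addresses fall in the same network.

    prefix_len=24 (netmask 255.255.255.0) is an assumption; pass your
    router's actual prefix length if it differs.
    """
    # strict=False lets us build the network from a host address like 192.168.1.20/24.
    network = ipaddress.IPv4Network(f"{host_ip}/{prefix_len}", strict=False)
    return ipaddress.IPv4Address(robot_ip) in network
```

For example, `on_same_subnet("192.168.1.20", "192.168.1.42")` is `True`, while a laptop on a guest network at `192.168.2.20` fails the check, which would explain a connection that never comes up even with a valid certificate.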

It’s bad enough with the Alexa integration. I had an Alexa when they first came out, but had enough creepy incidents with Amazon to get rid of mine. Still, the world of Amazonians wants it and it’s a good selling point for Anki, so I get it. The integration seems to be done well enough to please those who wanted it, so maybe they’ll focus on other things now.

In all, I’d like to be able to transfer a version of what they have in the cloud onto my own systems and tinker with that as well.

Still, given what I have been playing with related to machine learning and natural language processing – it’s no mistake that I had the Anaconda distribution of Python installed already – I’m having a bit of fun playing with the SDK and testing the limitations of the hardware.

The video from Vector’s hardware is good enough for some basic things, but lighting really does affect it. This limits his exploration, and it limits his facial recognition ability (the one thing I’ve found you can access from the cloud, in a limited way).

I’ve been considering putting a polarizing film over the cameras for better images, and have even considered mounting a light source on Vector for use in the dark. Unfortunately, that light couldn’t be controlled through Vector himself, though it could be controlled independently through code. I plan to play with the lights part of the SDK to see what I can get away with.
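Both ideas hinge on knowing when a frame is too dark. A minimal sketch, assuming the camera frame has already been turned into RGB tuples (for example with Pillow’s `Image.getdata()`): average the perceived brightness using the standard ITU-R BT.601 luma weights, then switch a hypothetical external light on and off with two separate thresholds so it doesn’t flicker around a single cut-off. The threshold values are guesses to tune against real frames, and `LightController` is a made-up name, not an SDK class.

```python
from typing import Iterable, Tuple

RGB = Tuple[int, int, int]


def mean_luma(pixels: Iterable[RGB]) -> float:
    """Average perceived brightness (0-255) using BT.601 luma weights."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
        count += 1
    if count == 0:
        raise ValueError("empty frame")
    return total / count


class LightController:
    """Hypothetical on/off decision for an external light, with hysteresis.

    The light turns on below `on_below` and only turns off again above
    `off_above`; brightness in between keeps the current state.
    """

    def __init__(self, on_below: float = 60.0, off_above: float = 90.0):
        if on_below >= off_above:
            raise ValueError("on_below must be lower than off_above")
        self.on_below = on_below
        self.off_above = off_above
        self.light_on = False

    def update(self, brightness: float) -> bool:
        """Feed one frame's brightness; return whether the light should be on."""
        if not self.light_on and brightness < self.on_below:
            self.light_on = True
        elif self.light_on and brightness > self.off_above:
            self.light_on = False
        return self.light_on
```

One catch with a light mounted on Vector: it brightens his own frames, so `off_above` needs to sit above what the light alone produces, or the light will switch itself off as soon as it comes on.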

You don’t get to fiddle with Vector’s own facial recognition code, but there are Python libraries for that, such as face_recognition on PyPI.
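The face_recognition library reduces each face to a 128-number encoding and decides whether two faces match by Euclidean distance against a tolerance (0.6 by default in its `compare_faces`). The standard-library sketch below re-implements only that matching step so the idea is visible without NumPy; in real use the encodings would come from `face_recognition.face_encodings()` run on frames from Vector’s camera. The short vectors in the test are made-up stand-ins, and `best_match` is a hypothetical helper, not part of either library.

```python
import math
from typing import Dict, Optional, Sequence


def face_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two face encodings of equal length."""
    if len(a) != len(b):
        raise ValueError("encodings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(
    known: Dict[str, Sequence[float]],
    unknown: Sequence[float],
    tolerance: float = 0.6,
) -> Optional[str]:
    """Return the name of the closest known face within tolerance, or None."""
    best_name: Optional[str] = None
    best_dist = tolerance
    for name, encoding in known.items():
        dist = face_distance(encoding, unknown)
        # <= mirrors compare_faces: a distance equal to the tolerance still matches.
        if dist <= best_dist:
            best_name, best_dist = name, dist
    return best_name
```

Lowering the tolerance makes matches stricter, which may help with the poorly lit frames mentioned above, at the cost of failing to recognize people more often.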

The events system does allow for more reactive behavior.
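Event-driven code registers callbacks that run when something happens, instead of polling in a loop. The SDK’s own mechanism lives in its `anki_vector.events` module; check its documentation for the exact callback signature. For experimenting offline, the pattern itself is plain publish/subscribe, and the sketch below is a minimal stand-in of my own, not the SDK’s implementation. The event names and the `on_face_seen` handler are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[str, Any], None]


class EventBus:
    """Minimal publish/subscribe dispatcher (illustrative, not the SDK's)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def unsubscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].remove(handler)

    def dispatch(self, event_type: str, payload: Any) -> int:
        """Call every handler registered for event_type; return how many ran."""
        # Copy the list so a handler can safely unsubscribe itself mid-dispatch.
        handlers = list(self._handlers.get(event_type, []))
        for handler in handlers:
            handler(event_type, payload)
        return len(handlers)


# Hypothetical reaction: queue a greeting for whoever is reported as seen.
greetings: List[str] = []


def on_face_seen(event_type: str, payload: Any) -> None:
    greetings.append(f"Hello, {payload['name']}!")
```

Subscribing `on_face_seen` to a `"face_seen"` event and dispatching `{"name": "Ada"}` queues one greeting; events nobody subscribed to are simply ignored.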

Making Vector use profanity is a must, if only once.

There are error codes that aren’t documented. I got error 915 twice on Vector while I was writing this, and the only information I found was on Reddit. Without documented error codes, we can’t do proper error trapping with Vector, and that’s a problem I hope they address in the Beta.
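Until the error codes are documented, about the best one can do is wrap SDK calls defensively: catch the failure, log whatever message comes back so there’s something to search for later, and retry a few times before giving up. A minimal sketch, assuming nothing about which exception types the SDK raises; it catches `Exception` broadly, which you would narrow once the real types are known. `with_retries` is a hypothetical helper.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("vector")


def with_retries(
    action: Callable[[], T],
    attempts: int = 3,
    delay_s: float = 1.0,
) -> T:
    """Run action, retrying on any exception; re-raise the last failure.

    Catching Exception is deliberately broad because the error codes
    aren't documented yet; narrow it once they are.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except Exception as exc:
            # Log the raw error so undocumented codes can be searched for later.
            log.warning("attempt %d/%d failed: %r", attempt, attempts, exc)
            if attempt == attempts:
                raise
            time.sleep(delay_s)
    raise AssertionError("unreachable")
```

An SDK call would be passed in as a zero-argument callable (for example wrapped in a `lambda`), so the same wrapper works for any command without knowing its arguments.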

Overall, I’m happier with the SDK, which shows promise and a bit of effort on Anki’s part. The criticisms I have so far are of an Alpha SDK, which means things will change in time.

They do need to get a bit better at responsiveness, though, something I suspect they’re already aware of. This level of success comes with painful growth. If only that were an engineering problem to solve.