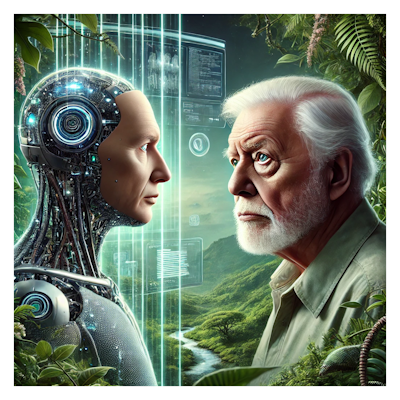

Generative AI is allowing people to do all sorts of things, including imitating voices we have come to respect and trust over the years. In the most recent case, Sir David Attenborough strongly objects to the imitation of his voice and finds it ‘profoundly disturbing’.

His voice is being used in all manner of ways.

It wasn’t long ago that Scarlett Johansson suffered a similar insult, one that was quickly ‘disappeared’.

The difference here is that a man who has spent decades showing people the natural world is having his voice used in disingenuous ways, and it should give us all pause. I use generative artificial intelligence, as do many others, but there is no way I would even consider presenting what I write or work on in someone else’s voice.

Who would do that? Why? It dilutes the work. Sure, it can be funny to have a narration by someone like Sir David Attenborough, or Morgan Freeman, or… all manner of people… but to trot out their voices to misrepresent the truth is a very grey area in an era of half-truths and outright lies being distributed on the Babel of the Internet.

Somewhere – I believe it was in Lessig’s ‘Free Culture’ – I had read that the UK allowed artists to control how their works were used. A quick search turned this up:

The Copyright, Designs and Patents Act 1988, is the current UK copyright law. It gives the creators of literary, dramatic, musical and artistic works the right to control the ways in which their material may be used. The rights cover: Broadcast and public performance, copying, adapting, issuing, renting and lending copies to the public. In many cases, the creator will also have the right to be identified as the author and to object to distortions of his work.

The UK Copyright Service

It would seem that something similar would have to be done for the voices and even the appearance of people around the world – yet in an age moving toward artificial intelligence, where content has been scraped without permission, the only people who can actually stop this are the ones doing the scraping.

The world of trusted humans is being diluted by untrustworthy humans.