While the information economy has thrived for a select few, we’re now in an age of context. It’s sometimes hard to explain this to people around me, because many were blissfully unaware that information had become the new oil back in the late 1990s.

Consider that there hasn’t been as much uproar as one would expect in Trinidad and Tobago over the TSTT data breach, in which scanned copies of identification and other documents were exposed. A few people I’ve interacted with have been laissez-faire about the whole thing, not because there’s nothing they can do about it¹ but because they themselves don’t see the value of the information.

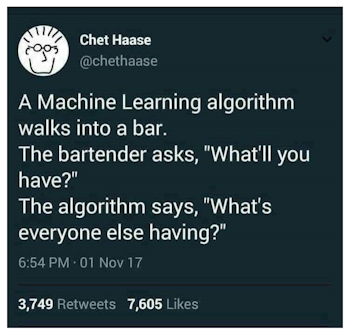

Social networks have capitalized on this. I still have family members and friends who do those wonky quizzes on Facebook that give other parties access to information from their Facebook accounts. ‘X’, still better known as Twitter, does the same. Data about vast numbers of people and their interactions, gathered in large enough quantities, becomes information. In turn, that information requires context, which I have written about before, including in relation to AI and synthetic recursion.

We start with data, process it within a context to produce information, and then feed that information (or the raw data) to an artificial intelligence to create results for a user.

This, now, is being fed into AI. It seems harmless to many people.

John Hagel recently wrote about what is missing in artificial intelligence. He raises some very important points, such as diminishing trust in large global organizations and the impact that has on how much information people will knowingly share.

The context I provided above, with one of the data breaches in Trinidad and Tobago, doesn’t seem to jibe with that on the surface, but it does. I can stand in line at a retailer and listen to people of all political stripes and income levels complain about corruption in government. There may not be agreement on who or what the problem is, but there seems to be a consensus that the government isn’t something they trust.

It’s the same in the United States, and just about everywhere I have been or have heard from.

…People will increasingly embrace technology tools that can help them be much more selective in providing access to their data. This continues to be a big opportunity for a new kind of business that I called “infomediaries” – businesses that will become trusted advisors and manage our data on our behalf (I wrote about this in my book, Net Worth)…

“What’s Missing in Artificial Intelligence?”, John Hagel, JohnHagel.com, Nov 27th, 2023.

The affiliate links in the quote are his, not mine.

This is where it gets interesting. If people slow the flow of their personal information into social networks, social media, apps, etc., that information should become stale and dated, like the print version of the Encyclopædia Britannica some of us grew up with. This is where John points out something important.

Explicit knowledge is knowledge that we can express and communicate in words. Tacit knowledge is knowledge that is embodied in our actions, but that we would find very challenging to express. It’s about knowledge that we acquire when dealing with real-life situations and seeking to find ways to have increasing impact. Some tacit knowledge is long-lasting – it involves mastering enduring skills and practices and cannot be acquired by reading books or listening to lectures. Those who have mastered these skills and practices find it very hard to explain everything they do.

Here’s the challenge – in a rapidly changing world, tacit knowledge increases in proportion to explicit knowledge.

“What’s Missing in Artificial Intelligence?”, John Hagel, JohnHagel.com, Nov 27th, 2023.

This is where context comes in.

When you interact with well-designed software that is actually trying to help you, one of the things its engineers look for is the user’s intention. That intention exists in a context, and the combination of intention and context can produce better results if handled properly.
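To make that a little more concrete, here is a minimal, hypothetical sketch in Python. The same intention (“coffee”) produces different rankings depending on context, which here is just a location and the hour of the day. The `Context` and `Item` types, the `rank_results` function, the scoring weights and the sample places are all assumptions made up for illustration, not taken from any real product.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical context signals a piece of software might know about a user."""
    location: str
    hour: int  # local hour of day, 0-23


@dataclass
class Item:
    """A hypothetical result the software could show."""
    name: str
    location: str
    open_from: int   # opening hour, inclusive
    open_until: int  # closing hour, exclusive
    tags: set[str] = field(default_factory=set)


def rank_results(intent: str, context: Context, items: list[Item]) -> list[str]:
    """Filter by intent, then order what remains by how well it fits the context."""
    scored = []
    for item in items:
        if intent not in item.tags:
            continue  # intention alone decides what is relevant at all
        score = 0
        if item.location == context.location:
            score += 2  # being nearby is weighted most (an arbitrary, assumed weight)
        if item.open_from <= context.hour < item.open_until:
            score += 1  # being open right now also helps
        scored.append((score, item.name))
    # Highest score first; ties broken alphabetically so the output is stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored]


# Hypothetical sample data.
items = [
    Item("Cafe A", "Port of Spain", 7, 15, {"coffee"}),
    Item("Cafe B", "San Fernando", 6, 22, {"coffee"}),
    Item("Bookshop C", "Port of Spain", 9, 18, {"books"}),
]

print(rank_results("coffee", Context("Port of Spain", 8), items))  # ['Cafe A', 'Cafe B']
print(rank_results("coffee", Context("San Fernando", 20), items))  # ['Cafe B', 'Cafe A']
```

Same intention, different context, different results. Real systems weigh far more signals than this, but the shape of the idea is the same.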

To make the point, I’ll quote an article that could itself be biased but provides a context. I won’t say whether it’s a good or bad context; I’ll let readers decide.

…We turn now to look at a stunning new exposé on how Israel is using artificial intelligence to draw up targets and how Israel has loosened its constraints on attacks that could kill civilians. One former intelligence officer says Israel has developed a, quote, “mass assassination factory.”…

“Israel Is Using Artificial Intelligence to Generate Military Targets in Gaza”, Amy Goodman, Truthout, Dec 1st, 2023.

I’m not going to try to navigate the Israeli-Palestinian conflict here; I’m only offering an example of a context in which information can be used.

The context for the Israelis, if this is all true, is picking targets, and the intent would be to minimize what we call collateral damage. The stakes are high.

The articles accuse Israel of loosening the constraints on the artificial intelligence that selects its targets, which is an example of broadening results based on the intention within the user’s (Israel’s) context. It could also tighten those constraints for fewer casualties, but in doing so it might not be able to hit targets that are fighting it.

That this is being automated at all is probably at best a little disturbing. Is it true? I don’t know. I recognize biases, and as John pointed out, trust is an issue.

Is it fair to use this as an example? I think it is, whether true or not, because it also points out some of the stakes we might be talking about with artificial intelligence. Context matters.

In theory, I could agree with this sort of use of artificial intelligence if it spared civilians. If you look around the media, though, you’ll find the entire Israeli-Palestinian issue more divisive than most other things, and therefore contexts vary. Intentions vary. I can honestly say that though I know a lot about it, I do not know enough to write about it. It is not my story to write.

In that regard, it’s a perfect example, because we can see how contexts and intentions shift over time, and how not everyone shares the same contexts or the same intentions.²

That example goes a bit beyond asking an AI to generate an image of a person and getting a person of European descent as the result, even though people of European descent make up about 16% of the global population. That probably leaves the other 84% wondering why they have to type in specifics all the time. There’s lots of bias, as I have written about before.

Populations change. At one point, it was said that people of European ancestry made up 38% of the global population, but during that period the available information wasn’t what we have now, the global population was smaller, and Europeans were doing most of the emigrating.³

Contexts and intentions shift, and what is important today may not be important tomorrow. I agree that the ability to shift and adapt to contexts and intentions should be part of the future of the world, and by extension of artificial intelligence. Present iterations of AI, though, are trending toward centralization when the world will require more decentralization to allow for the diversity needed to tackle hard problems.

John calls it the rise of the Age of Context, and maybe we can also add the Age of the Acknowledgement of the Diversity of Intentions. His is snappier, though.

1. This is largely because the government of Trinidad and Tobago hasn’t gotten its data protection legislation out, or even made it an apparent priority, which demonstrates a lack of understanding of the value of information.

2. Personally, I just shake my head at the whole thing, because this should not be continuing, regardless of which side one believes is right. If you held a gun to my head, I’d say I’m on the side of the innocent civilians on both sides. Then someone will say the civilians aren’t innocent, and it gets worse from there. So let’s not start that.

3. European emigration is fascinating by itself.
